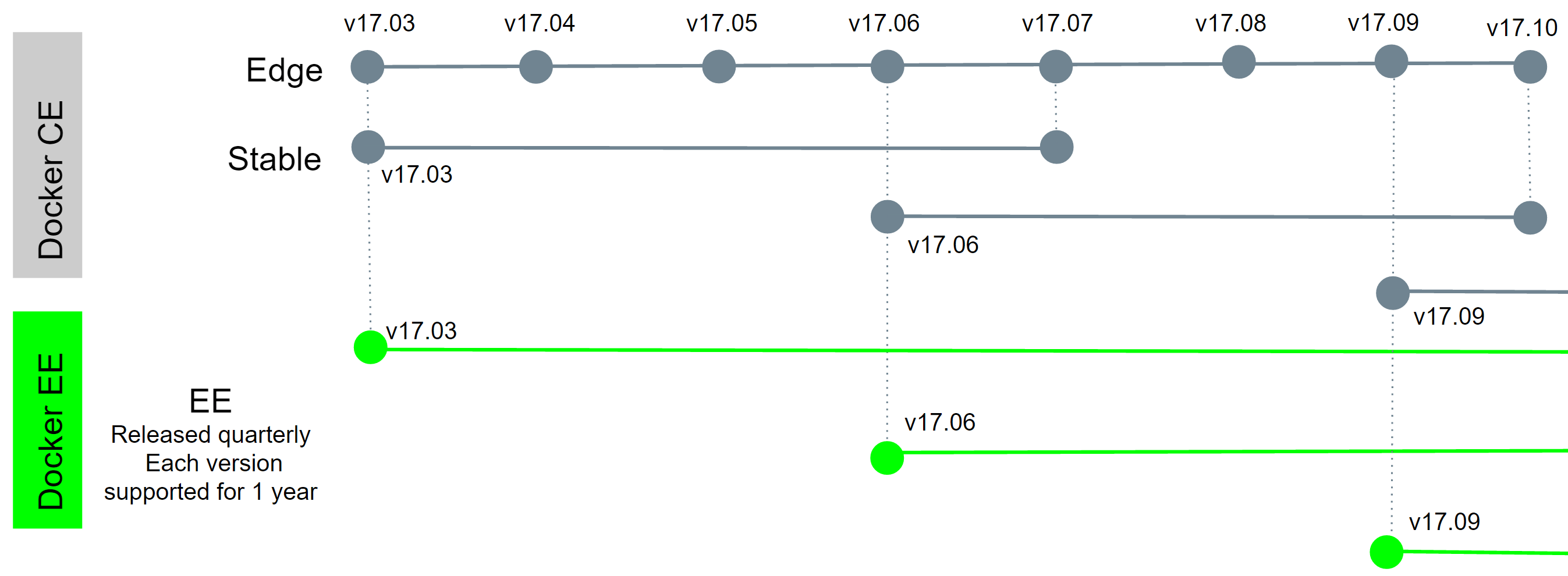

class: title, self-paced Container Orchestration<br/>with Docker and Swarm<br/> .debug[ ``` ``` These slides have been built from commit: afef6ca [common/title.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/title.md)] --- class: title, in-person Container Orchestration<br/>with Docker and Swarm<br/><br/></br> .footnote[ **Be kind to the WiFi!**<br/> <!-- *Use the 5G network.* --> *Don't use your hotspot.*<br/> *Don't stream videos or download big files during the workshop.*<br/> *Thank you!* **Slides: http://container.training/** ] .debug[[common/title.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/title.md)] --- ## A brief introduction - This was initially written to support in-person, instructor-led workshops and tutorials - You can also follow along on your own, at your own pace - We included as much information as possible in these slides - We recommend having a mentor to help you ... - ... Or be comfortable spending some time reading the Docker [documentation](https://docs.docker.com/) ... - ... 
And looking for answers in the [Docker forums](https://forums.docker.com), [StackOverflow](http://stackoverflow.com/questions/tagged/docker), and other outlets

.debug[[common/intro.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/intro.md)]

---

class: self-paced

## Hands on, you shall practice

- Nobody ever became a Jedi by spending their lives reading Wookieepedia

- Likewise, it will take more than merely *reading* these slides to make you an expert

- These slides include *tons* of exercises and examples

- They assume that you have access to some Docker nodes

- If you are attending a workshop or tutorial:
  <br/>you will be given specific instructions to access your cluster

- If you are doing this on your own:
  <br/>the first chapter will give you various options to get your own cluster

.debug[[common/intro.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/intro.md)]

---

## About these slides

- All the content is available in a public GitHub repository:

  https://github.com/jpetazzo/container.training

- You can get updated "builds" of the slides there:

  http://container.training/

<!--
.exercise[
```open https://github.com/jpetazzo/container.training```
```open http://container.training/```
]
-->

--

- Typos? Mistakes? Questions? Feel free to hover over the bottom of the slide ...

.footnote[.emoji[👇] Try it! The source file will be shown and you can view it on GitHub and fork and edit it.]
<!-- .exercise[ ```open https://github.com/jpetazzo/container.training/tree/master/slides/common/intro.md``` ] --> .debug[[common/intro.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/intro.md)] --- name: toc-chapter-1 ## Chapter 1 - [Pre-requirements](#toc-pre-requirements) - [Our sample application](#toc-our-sample-application) - [Running the application](#toc-running-the-application) - [Identifying bottlenecks](#toc-identifying-bottlenecks) - [SwarmKit](#toc-swarmkit) - [Declarative vs imperative](#toc-declarative-vs-imperative) - [Swarm mode](#toc-swarm-mode) - [Creating our first Swarm](#toc-creating-our-first-swarm) .debug[(auto-generated TOC)] --- name: toc-chapter-2 ## Chapter 2 - [Running our first Swarm service](#toc-running-our-first-swarm-service) - [Our app on Swarm](#toc-our-app-on-swarm) - [Hosting our own registry](#toc-hosting-our-own-registry) - [Global scheduling](#toc-global-scheduling) - [Integration with Compose](#toc-integration-with-compose) .debug[(auto-generated TOC)] --- name: toc-chapter-3 ## Chapter 3 - [Securing overlay networks](#toc-securing-overlay-networks) - [Updating services](#toc-updating-services) - [Rolling updates](#toc-rolling-updates) - [SwarmKit debugging tools](#toc-swarmkit-debugging-tools) .debug[(auto-generated TOC)] --- name: toc-chapter-4 ## Chapter 4 - [Secrets management and encryption at rest](#toc-secrets-management-and-encryption-at-rest) - [Least privilege model](#toc-least-privilege-model) - [Dealing with stateful services](#toc-dealing-with-stateful-services) - [Links and resources](#toc-links-and-resources) .debug[(auto-generated TOC)] .debug[[common/toc.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/toc.md)] --- class: pic .interstitial[] --- name: toc-pre-requirements class: title Pre-requirements .nav[ [Previous section](#toc-) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-our-sample-application) ] 
.debug[(automatically generated title slide)] --- # Pre-requirements - Be comfortable with the UNIX command line - navigating directories - editing files - a little bit of bash-fu (environment variables, loops) - Some Docker knowledge - `docker run`, `docker ps`, `docker build` - ideally, you know how to write a Dockerfile and build it <br/> (even if it's a `FROM` line and a couple of `RUN` commands) - It's totally OK if you are not a Docker expert! .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: extra-details ## Extra details - This slide should have a little magnifying glass in the top left corner (If it doesn't, it's because CSS is hard — Jérôme is only a backend person, alas) - Slides with that magnifying glass indicate slides providing extra details - Feel free to skip them if you're in a hurry! .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: title *Tell me and I forget.* <br/> *Teach me and I remember.* <br/> *Involve me and I learn.* Misattributed to Benjamin Franklin [(Probably inspired by Chinese Confucian philosopher Xunzi)](https://www.barrypopik.com/index.php/new_york_city/entry/tell_me_and_i_forget_teach_me_and_i_may_remember_involve_me_and_i_will_lear/) .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- ## Hands-on sections - The whole workshop is hands-on - We are going to build, ship, and run containers! - You are invited to reproduce all the demos - All hands-on sections are clearly identified, like the gray rectangle below .exercise[ - This is the stuff you're supposed to do! 
- Go to [swarm2017.container.training](http://swarm2017.container.training/) to view these slides - Join the chat room on [Slack](https://dockercommunity.slack.com/messages/C7GKACWDV) <!-- ```open http://container.training/``` --> ] .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: in-person ## Where are we going to run our containers? .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: in-person, pic  .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: in-person ## You get five VMs - Each person gets 5 private VMs (not shared with anybody else) - They'll remain up for the duration of the workshop - You should have a little card with login+password+IP addresses - You can automatically SSH from one VM to another - The nodes have aliases: `node1`, `node2`, etc. .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: in-person ## Why don't we run containers locally? - Installing that stuff can be hard on some machines (32 bits CPU or OS... Laptops without administrator access... etc.) - *"The whole team downloaded all these container images from the WiFi! <br/>... and it went great!"* (Literally no-one ever) - All you need is a computer (or even a phone or tablet!), with: - an internet connection - a web browser - an SSH client .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: in-person ## SSH clients - On Linux, OS X, FreeBSD... 
you are probably all set - On Windows, get one of these: - [putty](http://www.putty.org/) - Microsoft [Win32 OpenSSH](https://github.com/PowerShell/Win32-OpenSSH/wiki/Install-Win32-OpenSSH) - [Git BASH](https://git-for-windows.github.io/) - [MobaXterm](http://mobaxterm.mobatek.net/) - On Android, [JuiceSSH](https://juicessh.com/) ([Play Store](https://play.google.com/store/apps/details?id=com.sonelli.juicessh)) works pretty well - Nice-to-have: [Mosh](https://mosh.org/) instead of SSH, if your internet connection tends to lose packets <br/>(available with `(apt|yum|brew) install mosh`; then connect with `mosh user@host`) .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- class: in-person ## Connecting to our lab environment .exercise[ - Log into the first VM (`node1`) with SSH or MOSH <!-- ```bash for N in $(seq 1 5); do ssh -o StrictHostKeyChecking=no node$N true done ``` ```bash if which kubectl; then kubectl get all -o name | grep -v services/kubernetes | xargs -n1 kubectl delete fi ``` --> - Check that you can SSH (without password) to `node2`: ```bash ssh node2 ``` - Type `exit` or `^D` to come back to node1 <!-- ```bash exit``` --> ] If anything goes wrong — ask for help! .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- ## Doing or re-doing the workshop on your own? 
- Use something like
  [Play-With-Docker](http://play-with-docker.com/) or
  [Play-With-Kubernetes](https://medium.com/@marcosnils/introducing-pwk-play-with-k8s-159fcfeb787b)

  Zero setup effort; but environments are short-lived and might have limited resources

- Create your own cluster (local or cloud VMs)

  Small setup effort; small cost; flexible environments

- Create a bunch of clusters for you and your friends
  ([instructions](https://github.com/jpetazzo/container.training/tree/master/prepare-vms))

  Bigger setup effort; ideal for group training

.debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)]

---

class: self-paced

## Get your own Docker nodes

- If you already have some Docker nodes: great!

- If not: let's get some, thanks to Play-With-Docker

.exercise[

- Go to http://www.play-with-docker.com/

- Log in

- Create your first node

<!-- ```open http://www.play-with-docker.com/``` -->

]

You will need a Docker ID to use Play-With-Docker.

(Creating a Docker ID is free.)

.debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)]

---

## We will (mostly) interact with node1 only

*These remarks apply only when using multiple nodes, of course.*

- Unless instructed otherwise, **all commands must be run from the first VM, `node1`**

- We will only check out/copy the code on `node1`

- During normal operations, we do not need access to the other nodes

- If we had to troubleshoot issues, we would use a combination of:

  - SSH (to access system logs, daemon status...)

  - Docker API (to check running containers and container engine status)

.debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)]

---

## Terminals

Once in a while, the instructions will say:
<br/>"Open a new terminal."
There are multiple ways to do this: - create a new window or tab on your machine, and SSH into the VM; - use screen or tmux on the VM and open a new window from there. You are welcome to use the method that you feel the most comfortable with. .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- ## Tmux cheatsheet - Ctrl-b c → creates a new window - Ctrl-b n → go to next window - Ctrl-b p → go to previous window - Ctrl-b " → split window top/bottom - Ctrl-b % → split window left/right - Ctrl-b Alt-1 → rearrange windows in columns - Ctrl-b Alt-2 → rearrange windows in rows - Ctrl-b arrows → navigate to other windows - Ctrl-b d → detach session - tmux attach → reattach to session .debug[[common/prereqs.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/prereqs.md)] --- ## Brand new versions! - Engine 17.12 - Compose 1.17 - Machine 0.13 .exercise[ - Check all installed versions: ```bash docker version docker-compose -v docker-machine -v ``` ] .debug[[swarm/versions.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/versions.md)] --- ## Wait, what, 17.12 ?!? -- - Docker 1.13 = Docker 17.03 (year.month, like Ubuntu) - Every month, there is a new "edge" release (with new features) - Every quarter, there is a new "stable" release - Docker CE releases are maintained 4+ months - Docker EE releases are maintained 12+ months - For more details, check the [Docker EE announcement blog post](https://blog.docker.com/2017/03/docker-enterprise-edition/) .debug[[swarm/versions.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/versions.md)] --- class: extra-details ## Docker CE vs Docker EE - Docker EE: - $$$ - certification for select distros, clouds, and plugins - advanced management features (fine-grained access control, security scanning...) 
- Docker CE:

  - free
  - available through Docker Mac, Docker Windows, and major Linux distros
  - perfect for individuals and small organizations

.debug[[swarm/versions.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/versions.md)]

---

class: extra-details

## Why?

- More readable for enterprise users

  (i.e. the very nice folks who are kind enough to pay us big $$$ for our stuff)

- No impact for the community

  (beyond CE/EE suffix and version numbering change)

- Both trains leverage the same open source components

  (containerd, libcontainer, SwarmKit...)

- More predictable release schedule (see next slide)

.debug[[swarm/versions.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/versions.md)]

---

class: pic

.debug[[swarm/versions.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/versions.md)]

---

## What was added when?

| Year | Version | Features added |
| ---- | ------- | -------------- |
| 2015 | 1.9 | Overlay (multi-host) networking, network/IPAM plugins |
| 2016 | 1.10 | Embedded dynamic DNS |
| 2016 | 1.11 | DNS round robin load balancing |
| 2016 | 1.12 | Swarm mode, routing mesh, encrypted networking, healthchecks |
| 2017 | 1.13 | Stacks, attachable overlays, image squash and compress |
| 2017 | 1.13 | Windows Server 2016 Swarm mode |
| 2017 | 17.03 | Secrets |
| 2017 | 17.04 | Update rollback, placement preferences (soft constraints) |
| 2017 | 17.05 | Multi-stage image builds, service logs |
| 2017 | 17.06 | Swarm configs, node/service events |
| 2017 | 17.06 | Windows Server 2016 Swarm overlay networks, secrets |

.debug[[swarm/versions.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/versions.md)]

---

class: pic

.interstitial[]

---

name: toc-our-sample-application
class: title

Our sample application

.nav[
[Previous section](#toc-pre-requirements)
|
[Back to table of contents](#toc-chapter-1)
|
[Next section](#toc-running-the-application)
]

.debug[(automatically generated title slide)]

---

# Our sample
application - Visit the GitHub repository with all the materials of this workshop: <br/>https://github.com/jpetazzo/container.training - The application is in the [dockercoins]( https://github.com/jpetazzo/container.training/tree/master/dockercoins) subdirectory - Let's look at the general layout of the source code: there is a Compose file [docker-compose.yml]( https://github.com/jpetazzo/container.training/blob/master/dockercoins/docker-compose.yml) ... ... and 4 other services, each in its own directory: - `rng` = web service generating random bytes - `hasher` = web service computing hash of POSTed data - `worker` = background process using `rng` and `hasher` - `webui` = web interface to watch progress .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: extra-details ## Compose file format version *Particularly relevant if you have used Compose before...* - Compose 1.6 introduced support for a new Compose file format (aka "v2") - Services are no longer at the top level, but under a `services` section - There has to be a `version` key at the top level, with value `"2"` (as a string, not an integer) - Containers are placed on a dedicated network, making links unnecessary - There are other minor differences, but upgrade is easy and straightforward .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Links, naming, and service discovery - Containers can have network aliases (resolvable through DNS) - Compose file version 2+ makes each container reachable through its service name - Compose file version 1 did require "links" sections - Our code can connect to services using their short name (instead of e.g. IP address or FQDN) - Network aliases are automatically namespaced (i.e. 
you can have multiple apps declaring and using a service named `database`)

.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)]

---

## Example in `worker/worker.py`

```python
redis = Redis("`redis`")


def get_random_bytes():
    r = requests.get("http://`rng`/32")
    return r.content


def hash_bytes(data):
    r = requests.post("http://`hasher`/",
                      data=data,
                      headers={"Content-Type": "application/octet-stream"})
```

(Full source code available [here](https://github.com/jpetazzo/container.training/blob/8279a3bce9398f7c1a53bdd95187c53eda4e6435/dockercoins/worker/worker.py#L17))

.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)]

---

## What's this application?

--

- It is a DockerCoin miner! .emoji[💰🐳📦🚢]

--

- No, you can't buy coffee with DockerCoins

--

- How DockerCoins works:

  - `worker` asks `rng` to generate a few random bytes

  - `worker` feeds these bytes into `hasher`

  - and repeats forever!

  - every second, `worker` updates `redis` to indicate how many loops were done

  - `webui` queries `redis`, and computes and exposes "hashing speed" in your browser

.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)]

---

## Getting the application source code

- We will clone the GitHub repository

- The repository also contains scripts and tools that we will use throughout the workshop

.exercise[

<!--
```bash
if [ -d container.training ]; then
  mv container.training container.training.$$
fi
```
-->

- Clone the repository on `node1`:
  ```bash
  git clone https://github.com/jpetazzo/container.training
  ```

]

(You can also fork the repository on GitHub and clone your fork if you prefer that.)
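
The "How DockerCoins works" loop described earlier can be sketched in a few lines of Python. This is only an illustration, not the actual `worker.py`: `os.urandom` and `hashlib.sha256` are local stand-ins for the HTTP calls to `rng` and `hasher`, a plain dict stands in for `redis`, and the names `work_loop`, `counter`, `max_loops`, and `clock` are hypothetical.

```python
import hashlib
import os
import time


def get_random_bytes(n=32):
    # Stand-in for the HTTP GET to the `rng` service (assumption).
    return os.urandom(n)


def hash_bytes(data):
    # Stand-in for the HTTP POST to the `hasher` service (assumption).
    return hashlib.sha256(data).hexdigest()


def work_loop(counter, max_loops, interval=1.0, clock=time.monotonic):
    """Do max_loops units of work, flushing the count at most once per interval."""
    loops_done = 0
    deadline = clock() + interval
    for _ in range(max_loops):
        hash_bytes(get_random_bytes())
        loops_done += 1
        if clock() >= deadline:
            # Publish the work done since the last flush, then start over.
            counter["hashes"] = counter.get("hashes", 0) + loops_done
            loops_done = 0
            deadline = clock() + interval
    # Flush whatever is left when the (finite) sketch ends.
    counter["hashes"] = counter.get("hashes", 0) + loops_done
    return counter
```

The real worker loops forever; the sketch takes `max_loops` so that it terminates. Batching the counter updates keeps the number of writes to the store low, at the price of a coarser view of progress.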
.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: pic .interstitial[] --- name: toc-running-the-application class: title Running the application .nav[ [Previous section](#toc-our-sample-application) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-identifying-bottlenecks) ] .debug[(automatically generated title slide)] --- # Running the application Without further ado, let's start our application. .exercise[ - Go to the `dockercoins` directory, in the cloned repo: ```bash cd ~/container.training/dockercoins ``` - Use Compose to build and run all containers: ```bash docker-compose up ``` <!-- ```longwait units of work done``` ```keys ^C``` --> ] Compose tells Docker to build all container images (pulling the corresponding base images), then starts all containers, and displays aggregated logs. .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Lots of logs - The application continuously generates logs - We can see the `worker` service making requests to `rng` and `hasher` - Let's put that in the background .exercise[ - Stop the application by hitting `^C` ] - `^C` stops all containers by sending them the `TERM` signal - Some containers exit immediately, others take longer <br/>(because they don't handle `SIGTERM` and end up being killed after a 10s timeout) .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Restarting in the background - Many flags and commands of Compose are modeled after those of `docker` .exercise[ - Start the app in the background with the `-d` option: ```bash docker-compose up -d ``` - Check that our app is running with the `ps` command: ```bash docker-compose ps ``` ] `docker-compose ps` also shows the ports exposed by the application. 
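
Earlier, stopping the app with `^C` showed that some containers only exit after the 10s timeout because they don't handle `SIGTERM`. As a rough sketch of what "handling `SIGTERM`" can look like in a Python service, the snippet below records the stop request in a flag that the main loop checks between units of work. The names `stop_requested`, `request_stop`, `install_handlers`, and `run` are hypothetical and do not come from the DockerCoins code.

```python
import signal
import threading

# Set by the signal handler; the main loop polls it between units of work.
stop_requested = threading.Event()


def request_stop(signum, frame):
    """Signal handler: only record the request, so the loop can exit cleanly."""
    stop_requested.set()


def install_handlers():
    """Register the handler for TERM and INT (call this from the main thread)."""
    signal.signal(signal.SIGTERM, request_stop)
    signal.signal(signal.SIGINT, request_stop)


def run(work_once, max_loops=None):
    """Call work_once() until a stop is requested (or max_loops is reached)."""
    loops = 0
    while not stop_requested.is_set():
        if max_loops is not None and loops >= max_loops:
            break
        work_once()
        loops += 1
    return loops
```

A service would call `install_handlers()` once at startup and then `run(...)` with its own unit of work; when the signal arrives, the current unit finishes and the process can exit on its own instead of waiting to be killed.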
.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: extra-details ## Viewing logs - The `docker-compose logs` command works like `docker logs` .exercise[ - View all logs since container creation and exit when done: ```bash docker-compose logs ``` - Stream container logs, starting at the last 10 lines for each container: ```bash docker-compose logs --tail 10 --follow ``` <!-- ```wait units of work done``` ```keys ^C``` --> ] Tip: use `^S` and `^Q` to pause/resume log output. .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: extra-details ## Upgrading from Compose 1.6 .warning[The `logs` command has changed between Compose 1.6 and 1.7!] - Up to 1.6 - `docker-compose logs` is the equivalent of `logs --follow` - `docker-compose logs` must be restarted if containers are added - Since 1.7 - `--follow` must be specified explicitly - new containers are automatically picked up by `docker-compose logs` .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Connecting to the web UI - The `webui` container exposes a web dashboard; let's view it .exercise[ - With a web browser, connect to `node1` on port 8000 - Remember: the `nodeX` aliases are valid only on the nodes themselves - In your browser, you need to enter the IP address of your node <!-- ```open http://node1:8000``` --> ] A drawing area should show up, and after a few seconds, a blue graph will appear. .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: self-paced, extra-details ## If the graph doesn't load If you just see a `Page not found` error, it might be because your Docker Engine is running on a different machine. 
This can be the case if:

- you are using the Docker Toolbox

- you are using a VM (local or remote) created with Docker Machine

- you are controlling a remote Docker Engine

When you run DockerCoins in development mode, the web UI static files
are mapped to the container using a volume. Alas, such volumes only
work in a local environment, or when using Docker4Mac or Docker4Windows.

How to fix this?

Edit `docker-compose.yml`, comment out the `volumes` section, and try again.

.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)]

---

class: extra-details

## Why does the speed seem irregular?

- It *looks like* the speed is approximately 4 hashes/second

- Or more precisely: 4 hashes/second, with regular dips down to zero

- Why?

--

class: extra-details

- The app actually has a constant, steady speed: 3.33 hashes/second
  <br/>
  (which corresponds to 1 hash every 0.3 seconds, for *reasons*)

- Yes, and?

.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)]

---

class: extra-details

## The reason why this graph is *not awesome*

- The worker doesn't update the counter after every loop, but up to once per second

- The speed is computed by the browser, checking the counter about once per second

- Between two consecutive updates, the counter will increase either by 4, or by 0

- The perceived speed will therefore be 4 - 4 - 4 - 0 - 4 - 4 - 0 etc.

- What can we conclude from this?

--

class: extra-details

- Jérôme is clearly incapable of writing good frontend code

.debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)]

---

## Scaling up the application

- Our goal is to make that performance graph go up (without changing a line of code!)

--

- Before trying to scale the application, we'll figure out if we need more resources (CPU, RAM...)
- For that, we will use good old UNIX tools on our Docker node .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Looking at resource usage - Let's look at CPU, memory, and I/O usage .exercise[ - run `top` to see CPU and memory usage (you should see idle cycles) <!-- ```bash top``` ```wait Tasks``` ```keys ^C``` --> - run `vmstat 1` to see I/O usage (si/so/bi/bo) <br/>(the 4 numbers should be almost zero, except `bo` for logging) <!-- ```bash vmstat 1``` ```wait memory``` ```keys ^C``` --> ] We have available resources. - Why? - How can we use them? .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Scaling workers on a single node - Docker Compose supports scaling - Let's scale `worker` and see what happens! .exercise[ - Start one more `worker` container: ```bash docker-compose scale worker=2 ``` - Look at the performance graph (it should show a x2 improvement) - Look at the aggregated logs of our containers (`worker_2` should show up) - Look at the impact on CPU load with e.g. top (it should be negligible) ] .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Adding more workers - Great, let's add more workers and call it a day, then! .exercise[ - Start eight more `worker` containers: ```bash docker-compose scale worker=10 ``` - Look at the performance graph: does it show a x10 improvement? 
- Look at the aggregated logs of our containers - Look at the impact on CPU load and memory usage ] .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: pic .interstitial[] --- name: toc-identifying-bottlenecks class: title Identifying bottlenecks .nav[ [Previous section](#toc-running-the-application) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-swarmkit) ] .debug[(automatically generated title slide)] --- # Identifying bottlenecks - You should have seen a 3x speed bump (not 10x) - Adding workers didn't result in linear improvement - *Something else* is slowing us down -- - ... But what? -- - The code doesn't have instrumentation - Let's use state-of-the-art HTTP performance analysis! <br/>(i.e. good old tools like `ab`, `httping`...) .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Accessing internal services - `rng` and `hasher` are exposed on ports 8001 and 8002 - This is declared in the Compose file: ```yaml ... rng: build: rng ports: - "8001:80" hasher: build: hasher ports: - "8002:80" ... ``` .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Measuring latency under load We will use `httping`. .exercise[ - Check the latency of `rng`: ```bash httping -c 3 localhost:8001 ``` - Check the latency of `hasher`: ```bash httping -c 3 localhost:8002 ``` ] `rng` has a much higher latency than `hasher`. .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Let's draw hasty conclusions - The bottleneck seems to be `rng` - *What if* we don't have enough entropy and can't generate enough random numbers? - We need to scale out the `rng` service on multiple machines! Note: this is a fiction! We have enough entropy. But we need a pretext to scale out. 
(In fact, the code of `rng` uses `/dev/urandom`, which never runs out of entropy... <br/> ...and is [just as good as `/dev/random`](http://www.slideshare.net/PacSecJP/filippo-plain-simple-reality-of-entropy).) .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- ## Clean up - Before moving on, let's remove those containers .exercise[ - Tell Compose to remove everything: ```bash docker-compose down ``` ] .debug[[common/sampleapp.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/sampleapp.md)] --- class: pic .interstitial[] --- name: toc-swarmkit class: title SwarmKit .nav[ [Previous section](#toc-identifying-bottlenecks) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-declarative-vs-imperative) ] .debug[(automatically generated title slide)] --- # SwarmKit - [SwarmKit](https://github.com/docker/swarmkit) is an open source toolkit to build multi-node systems - It is a reusable library, like libcontainer, libnetwork, vpnkit ... - It is a plumbing part of the Docker ecosystem -- .footnote[.emoji[🐳] Did you know that кит means "whale" in Russian?] 
.debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- ## SwarmKit features - Highly-available, distributed store based on [Raft]( https://en.wikipedia.org/wiki/Raft_%28computer_science%29) <br/>(avoids depending on an external store: easier to deploy; higher performance) - Dynamic reconfiguration of Raft without interrupting cluster operations - *Services* managed with a *declarative API* <br/>(implementing *desired state* and *reconciliation loop*) - Integration with overlay networks and load balancing - Strong emphasis on security: - automatic TLS keying and signing; automatic cert rotation - full encryption of the data plane; automatic key rotation - least privilege architecture (single-node compromise ≠ cluster compromise) - on-disk encryption with optional passphrase .debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- class: extra-details ## Where is the key/value store? - Many orchestration systems use a key/value store backed by a consensus algorithm <br/> (k8s→etcd→Raft, mesos→zookeeper→ZAB, etc.) - SwarmKit implements the Raft algorithm directly <br/> (Nomad is similar; thanks [@cbednarski](https://twitter.com/@cbednarski), [@diptanu](https://twitter.com/diptanu) and others for pointing it out!) - Analogy courtesy of [@aluzzardi](https://twitter.com/aluzzardi): *It's like B-Trees and RDBMS. They are different layers, often associated. 
But you don't need to bring up a full SQL server when all you need is to index some data.* - As a result, the orchestrator has direct access to the data <br/> (the main copy of the data is stored in the orchestrator's memory) - Simpler, easier to deploy and operate; also faster .debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- ## SwarmKit concepts (1/2) - A *cluster* will be at least one *node* (preferably more) - A *node* can be a *manager* or a *worker* - A *manager* actively takes part in the Raft consensus, and keeps the Raft log - You can talk to a *manager* using the SwarmKit API - One *manager* is elected as the *leader*; other managers merely forward requests to it - The *workers* get their instructions from the *managers* - Both *workers* and *managers* can run containers .debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- ## Illustration On the next slide: - whales = nodes (workers and managers) - monkeys = managers - purple monkey = leader - grey monkeys = followers - dotted triangle = raft protocol .debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- class: pic  .debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- ## SwarmKit concepts (2/2) - The *managers* expose the SwarmKit API - Using the API, you can indicate that you want to run a *service* - A *service* is specified by its *desired state*: which image, how many instances... 
- The *leader* uses different subsystems to break down services into *tasks*: <br/>orchestrator, scheduler, allocator, dispatcher - A *task* corresponds to a specific container, assigned to a specific *node* - *Nodes* know which *tasks* should be running, and will start or stop containers accordingly (through the Docker Engine API) You can refer to the [NOMENCLATURE](https://github.com/docker/swarmkit/blob/master/design/nomenclature.md) in the SwarmKit repo for more details. .debug[[swarm/swarmkit.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmkit.md)] --- class: pic .interstitial[] --- name: toc-declarative-vs-imperative class: title Declarative vs imperative .nav[ [Previous section](#toc-swarmkit) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-swarm-mode) ] .debug[(automatically generated title slide)] --- # Declarative vs imperative - Our container orchestrator puts a very strong emphasis on being *declarative* - Declarative: *I would like a cup of tea.* - Imperative: *Boil some water. Pour it in a teapot. Add tea leaves. Steep for a while. Serve in cup.* -- - Declarative seems simpler at first ... -- - ... As long as you know how to brew tea .debug[[common/declarative.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/declarative.md)] --- ## Declarative vs imperative - What declarative would really be: *I want a cup of tea, obtained by pouring an infusion¹ of tea leaves in a cup.* -- *¹An infusion is obtained by letting the object steep a few minutes in hot² water.* -- *²Hot liquid is obtained by pouring it in an appropriate container³ and setting it on a stove.* -- *³Ah, finally, containers! Something we know about. Let's get to work, shall we?* -- .footnote[Did you know there was an [ISO standard](https://en.wikipedia.org/wiki/ISO_3103) specifying how to brew tea?] 
.debug[[common/declarative.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/declarative.md)] --- ## Declarative vs imperative - Imperative systems: - simpler - if a task is interrupted, we have to restart from scratch - Declarative systems: - if a task is interrupted (or if we show up to the party half-way through), we can figure out what's missing and do only what's necessary - we need to be able to *observe* the system - ... and compute a "diff" between *what we have* and *what we want* .debug[[common/declarative.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/declarative.md)] --- class: pic .interstitial[] --- name: toc-swarm-mode class: title Swarm mode .nav[ [Previous section](#toc-declarative-vs-imperative) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-creating-our-first-swarm) ] .debug[(automatically generated title slide)] --- # Swarm mode - Since version 1.12, the Docker Engine embeds SwarmKit - All the SwarmKit features are "asleep" until you enable "Swarm mode" - Examples of Swarm Mode commands: - `docker swarm` (enable Swarm mode; join a Swarm; adjust cluster parameters) - `docker node` (view nodes; promote/demote managers; manage nodes) - `docker service` (create and manage services) ??? - The Docker API exposes the same concepts - The SwarmKit API is also exposed (on a separate socket) .debug[[swarm/swarmmode.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmmode.md)] --- ## Swarm mode needs to be explicitly activated - By default, all this new code is inactive - Swarm mode can be enabled, "unlocking" SwarmKit functions <br/>(services, out-of-the-box overlay networks, etc.) .exercise[ - Try a Swarm-specific command: ```bash docker node ls ``` <!-- Ignore errors: ```wait not a swarm manager``` --> ] -- You will get an error message: ``` Error response from daemon: This node is not a swarm manager. [...] 
``` .debug[[swarm/swarmmode.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmmode.md)] --- class: pic .interstitial[] --- name: toc-creating-our-first-swarm class: title Creating our first Swarm .nav[ [Previous section](#toc-swarm-mode) | [Back to table of contents](#toc-chapter-1) | [Next section](#toc-running-our-first-swarm-service) ] .debug[(automatically generated title slide)] --- # Creating our first Swarm - The cluster is initialized with `docker swarm init` - This should be executed on a first, seed node - .warning[DO NOT execute `docker swarm init` on multiple nodes!] You would have multiple disjoint clusters. .exercise[ - Create our cluster from node1: ```bash docker swarm init ``` ] -- class: advertise-addr If Docker tells you that it `could not choose an IP address to advertise`, see next slide! .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: advertise-addr ## IP address to advertise - When running in Swarm mode, each node *advertises* its address to the others <br/> (i.e. 
it tells them *"you can contact me on 10.1.2.3:2377"*) - If the node has only one IP address, it is used automatically <br/> (The addresses of the loopback interface and the Docker bridge are ignored) - If the node has multiple IP addresses, you **must** specify which one to use <br/> (Docker refuses to pick one randomly) - You can specify an IP address or an interface name <br/> (in the latter case, Docker will read the IP address of the interface and use it) - You can also specify a port number <br/> (otherwise, the default port 2377 will be used) .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: advertise-addr ## Using a non-default port number - Changing the *advertised* port does not change the *listening* port - If you only pass `--advertise-addr eth0:7777`, Swarm will still listen on port 2377 - You will probably need to pass `--listen-addr eth0:7777` as well - This is to accommodate scenarios where these ports *must* be different <br/> (port mapping, load balancers...) Example to run Swarm on a different port: ```bash docker swarm init --advertise-addr eth0:7777 --listen-addr eth0:7777 ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: advertise-addr ## Which IP address should be advertised? - If your nodes have only one IP address, it's safe to let autodetection do the job .small[(Except if your instances have different private and public addresses, e.g. 
on EC2, and you are building a Swarm involving nodes inside and outside the private network: then you should advertise the public address.)] - If your nodes have multiple IP addresses, pick an address which is reachable *by every other node* of the Swarm - If you are using [play-with-docker](http://play-with-docker.com/), use the IP address shown next to the node name .small[(This is the address of your node on your private internal overlay network. The other address that you might see is the address of your node on the `docker_gwbridge` network, which is used for outbound traffic.)] Examples: ```bash docker swarm init --advertise-addr 172.24.0.2 docker swarm init --advertise-addr eth0 ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: extra-details ## Using a separate interface for the data path - You can use different interfaces (or IP addresses) for control and data - You set the _control plane path_ with `--advertise-addr` and `--listen-addr` (This will be used for SwarmKit manager/worker communication, leader election, etc.) - You set the _data plane path_ with `--data-path-addr` (This will be used for traffic between containers) - Both flags can accept either an IP address, or an interface name (When specifying an interface name, Docker will use its first IP address) .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- ## Token generation - In the output of `docker swarm init`, we have a message confirming that our node is now the (single) manager: ``` Swarm initialized: current node (8jud...) is now a manager. 
``` - Docker generated two security tokens (like passphrases or passwords) for our cluster - The CLI shows us the command to use on other nodes to add them to the cluster using the "worker" security token: ``` To add a worker to this swarm, run the following command: docker swarm join \ --token SWMTKN-1-59fl4ak4nqjmao1ofttrc4eprhrola2l87... \ 172.31.4.182:2377 ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: extra-details ## Checking that Swarm mode is enabled .exercise[ - Run the traditional `docker info` command: ```bash docker info ``` ] The output should include: ``` Swarm: active NodeID: 8jud7o8dax3zxbags3f8yox4b Is Manager: true ClusterID: 2vcw2oa9rjps3a24m91xhvv0c ... ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- ## Running our first Swarm mode command - Let's retry the exact same command as earlier .exercise[ - List the nodes (well, the only node) of our cluster: ```bash docker node ls ``` ] The output should look like the following: ``` ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS 8jud...ox4b * node1 Ready Active Leader ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- ## Adding nodes to the Swarm - A cluster with one node is not a lot of fun - Let's add `node2`! - We need the token that was shown earlier -- - You wrote it down, right? 
-- - Don't panic, we can easily see it again .emoji[😏] .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- ## Adding nodes to the Swarm .exercise[ - Show the token again: ```bash docker swarm join-token worker ``` - Log into `node2`: ```bash ssh node2 ``` - Copy-paste the `docker swarm join ...` command <br/>(that was displayed just before) <!-- ```copypaste docker swarm join --token SWMTKN.*?:2377``` --> ] .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: extra-details ## Check that the node was added correctly - Stay on `node2` for now! .exercise[ - We can still use `docker info` to verify that the node is part of the Swarm: ```bash docker info | grep ^Swarm ``` ] - However, Swarm commands will not work; try, for instance: ```bash docker node ls ``` <!-- Ignore errors: .dummy[```wait not a swarm manager```] --> - This is because the node that we added is currently a *worker* - Only *managers* can accept Swarm-specific commands .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- ## View our two-node cluster - Let's go back to `node1` and see what our cluster looks like .exercise[ - Switch back to `node1` (with `exit`, `Ctrl-D` ...) 
<!-- ```keys ^D``` --> - View the cluster from `node1`, which is a manager: ```bash docker node ls ``` ] The output should be similar to the following: ``` ID HOSTNAME STATUS AVAILABILITY MANAGER STATUS 8jud...ox4b * node1 Ready Active Leader ehb0...4fvx node2 Ready Active ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: under-the-hood ## Under the hood: docker swarm init When we do `docker swarm init`: - a keypair is created for the root CA of our Swarm - a keypair is created for the first node - a certificate is issued for this node - the join tokens are created .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: under-the-hood ## Under the hood: join tokens There is one token to *join as a worker*, and another to *join as a manager*. The join tokens have two parts: - a secret key (preventing unauthorized nodes from joining) - a fingerprint of the root CA certificate (preventing MITM attacks) If a token is compromised, it can be rotated instantly with: ``` docker swarm join-token --rotate <worker|manager> ``` .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: under-the-hood ## Under the hood: docker swarm join When a node joins the Swarm: - it is issued its own keypair, signed by the root CA - if the node is a manager: - it joins the Raft consensus - it connects to the current leader - it accepts connections from worker nodes - if the node is a worker: - it connects to one of the managers (leader or follower) .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: under-the-hood ## Under the hood: cluster communication - The *control plane* is encrypted with AES-GCM; keys are rotated every 12 hours - Authentication is done 
with mutual TLS; certificates are rotated every 90 days (`docker swarm update` lets you change this delay or use an external CA) - The *data plane* (communication between containers) is not encrypted by default (but this can be activated on a per-network basis, using IPsec, leveraging hardware crypto if available) .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- class: under-the-hood ## Under the hood: I want to know more! Revisit SwarmKit concepts: - Docker 1.12 Swarm Mode Deep Dive Part 1: Topology ([video](https://www.youtube.com/watch?v=dooPhkXT9yI)) - Docker 1.12 Swarm Mode Deep Dive Part 2: Orchestration ([video](https://www.youtube.com/watch?v=_F6PSP-qhdA)) Some presentations from the Docker Distributed Systems Summit in Berlin: - Heart of the SwarmKit: Topology Management ([slides](https://speakerdeck.com/aluzzardi/heart-of-the-swarmkit-topology-management)) - Heart of the SwarmKit: Store, Topology & Object Model ([slides](http://www.slideshare.net/Docker/heart-of-the-swarmkit-store-topology-object-model)) ([video](https://www.youtube.com/watch?v=EmePhjGnCXY)) And DockerCon Black Belt talks: .blackbelt[DC17US: Everything You Thought You Already Knew About Orchestration ([video](https://www.youtube.com/watch?v=Qsv-q8WbIZY&list=PLkA60AVN3hh-biQ6SCtBJ-WVTyBmmYho8&index=6))] .blackbelt[DC17EU: Container Orchestration from Theory to Practice ([video](https://dockercon.docker.com/watch/5fhwnQxW8on1TKxPwwXZ5r))] .debug[[swarm/creatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/creatingswarm.md)] --- ## Adding more manager nodes - Right now, we have only one manager (node1) - If we lose it, we lose quorum - and that's *very bad!* - Containers running on other nodes will be fine ... - But we won't be able to get or set anything related to the cluster - If the manager is permanently gone, we will have to do a manual repair!
- Nobody wants to do that ... so let's make our cluster highly available .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- class: self-paced ## Adding more managers With Play-With-Docker: ```bash TOKEN=$(docker swarm join-token -q manager) for N in $(seq 4 5); do export DOCKER_HOST=tcp://node$N:2375 docker swarm join --token $TOKEN node1:2377 done unset DOCKER_HOST ``` .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- class: in-person ## Building our full cluster - We could SSH to nodes 3, 4, 5; and copy-paste the command -- class: in-person - Or we could use the AWESOME POWER OF THE SHELL! -- class: in-person  -- class: in-person - No, not *that* shell .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- class: in-person ## Let's form like Swarm-tron - Let's get the token, and loop over the remaining nodes with SSH .exercise[ - Obtain the manager token: ```bash TOKEN=$(docker swarm join-token -q manager) ``` - Loop over the 3 remaining nodes: ```bash for NODE in node3 node4 node5; do ssh $NODE docker swarm join --token $TOKEN node1:2377 done ``` ] [That was easy.](https://www.youtube.com/watch?v=3YmMNpbFjp0) .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## Controlling the Swarm from other nodes .exercise[ - Try the following command on a few different nodes: ```bash docker node ls ``` ] On manager nodes: <br/>you will see the list of nodes, with a `*` denoting the node you're talking to. On non-manager nodes: <br/>you will get an error message telling you that the node is not a manager. As we saw earlier, you can only control the Swarm through a manager node. 
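
A script that may end up running on any node can check its role before issuing Swarm commands. The following is a minimal sketch, assuming the `Swarm.ControlAvailable` field reported by `docker info`; the function name `is_swarm_manager` is made up for this example and is not part of Docker.

```bash
# Sketch: succeed only when the local Engine is a Swarm manager.
# `docker info` prints "true" for Swarm.ControlAvailable on managers,
# and "false" on workers or when Swarm mode is not enabled.
# (is_swarm_manager is a hypothetical helper name.)
is_swarm_manager() {
  [ "$(docker info --format '{{.Swarm.ControlAvailable}}' 2>/dev/null)" = "true" ]
}
```

Usage could look like `is_swarm_manager && docker node ls`, which skips the command (instead of failing with an error) on worker nodes.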
.debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- class: self-paced ## Play-With-Docker node status icon - If you're using Play-With-Docker, you get node status icons - Node status icons are displayed left of the node name - No icon = no Swarm mode detected - Solid blue icon = Swarm manager detected - Blue outline icon = Swarm worker detected  .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## Dynamically changing the role of a node - We can change the role of a node on the fly: `docker node promote nodeX` → make nodeX a manager <br/> `docker node demote nodeX` → make nodeX a worker .exercise[ - See the current list of nodes: ``` docker node ls ``` - Promote any worker node to be a manager: ``` docker node promote <node_name_or_id> ``` ] .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## How many managers do we need? - 2N+1 nodes can (and will) tolerate N failures <br/>(you can have an even number of managers, but there is no point) -- - 1 manager = no failure - 3 managers = 1 failure - 5 managers = 2 failures (or 1 failure during 1 maintenance) - 7 managers and more = now you might be overdoing it a little bit .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## Why not have *all* nodes be managers? - Intuitively, it's harder to reach consensus in larger groups - With Raft, writes have to go to all nodes (and be acknowledged by a majority of them) - More nodes = more network traffic - Bigger network = more latency .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## What would MacGyver do?
- If some of your machines are more than 10ms away from each other, <br/> try to break them down into multiple clusters (keeping internal latency low) - Groups of up to 9 nodes: all of them are managers - Groups of 10 nodes and up: pick 5 "stable" nodes to be managers <br/> (Cloud pro-tip: use separate auto-scaling groups for managers and workers) - Groups of more than 100 nodes: watch your managers' CPU and RAM - Groups of more than 1000 nodes: - if you can afford to have fast, stable managers, add more of them - otherwise, break down your nodes into multiple clusters .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## What's the upper limit? - We don't know! - Internal testing at Docker Inc.: 1000-10000 nodes is fine - deployed to a single cloud region - one of the main take-aways was *"you're gonna need a bigger manager"* - Testing by the community: [4700 heterogeneous nodes all over the 'net](https://sematext.com/blog/2016/11/14/docker-swarm-lessons-from-swarm3k/) - it just works - more nodes require more CPU; more containers require more RAM - scheduling of large jobs (70000 containers) is slow, though (working on it!)
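
When picking a manager count for a cluster of any size, the "2N+1 nodes tolerate N failures" rule from the earlier slide can be turned into a quick calculation. This is only an illustrative sketch of the arithmetic (a Raft group needs a majority, i.e. `floor(n/2) + 1` members, to make progress); the function names are made up for this example.

```bash
# Sketch: quorum size and tolerated failures for a given number of managers.
# quorum             = floor(n / 2) + 1
# tolerated failures = n - quorum = floor((n - 1) / 2)
raft_quorum() {
  echo $(( $1 / 2 + 1 ))
}

raft_tolerated_failures() {
  echo $(( ($1 - 1) / 2 ))
}

# Compare odd and even manager counts.
for MANAGERS in 1 2 3 4 5 7; do
  echo "$MANAGERS managers: quorum=$(raft_quorum "$MANAGERS")," \
       "tolerated failures=$(raft_tolerated_failures "$MANAGERS")"
done
```

The even counts show why there is no point in having an even number of managers: 4 managers tolerate the same single failure as 3, while requiring a larger quorum.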
.debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- ## Real-life deployment methods -- Running commands manually over SSH -- (lol jk) -- - Using your favorite configuration management tool - [Docker for AWS](https://docs.docker.com/docker-for-aws/#quickstart) - [Docker for Azure](https://docs.docker.com/docker-for-azure/) .debug[[swarm/morenodes.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/morenodes.md)] --- class: pic .interstitial[] --- name: toc-running-our-first-swarm-service class: title Running our first Swarm service .nav[ [Previous section](#toc-creating-our-first-swarm) | [Back to table of contents](#toc-chapter-2) | [Next section](#toc-our-app-on-swarm) ] .debug[(automatically generated title slide)] --- # Running our first Swarm service - How do we run services? Simplified version: `docker run` → `docker service create` .exercise[ - Create a service featuring an Alpine container pinging Google resolvers: ```bash docker service create --name pingpong alpine ping 8.8.8.8 ``` - Check the result: ```bash docker service ps pingpong ``` ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details ## `--detach` for service creation (New in Docker Engine 17.05) If you are running Docker 17.05 to 17.09, you will see the following message: ``` Since --detach=false was not specified, tasks will be created in the background. In a future release, --detach=false will become the default. ``` You can ignore that for now; but we'll come back to it in just a few minutes! .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Checking service logs (New in Docker Engine 17.05) - Just like `docker logs` shows the output of a specific local container ... - ... 
`docker service logs` shows the output of all the containers of a specific service .exercise[ - Check the output of our ping command: ```bash docker service logs pingpong ``` ] Flags `--follow` and `--tail` are available, as well as a few others. Note: by default, when a container is destroyed (e.g. when scaling down), its logs are lost. .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details ## Before Docker Engine 17.05 - Docker 1.13/17.03/17.04 have `docker service logs` as an experimental feature <br/>(available only when enabling the experimental feature flag) - We have to use `docker logs`, which only works on local containers - We will have to connect to the node running our container <br/>(unless it was scheduled locally, of course) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details ## Looking up where our container is running - The `docker service ps` command told us where our container was scheduled .exercise[ - Look up the `NODE` on which the container is running: ```bash docker service ps pingpong ``` - If you use Play-With-Docker, switch to that node's tab, or set `DOCKER_HOST` - Otherwise, `ssh` into that node or use `eval $(docker-machine env node...)` ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details ## Viewing the logs of the container .exercise[ - See that the container is running and check its ID: ```bash docker ps ``` - View its logs: ```bash docker logs containerID ``` <!-- ```wait No such container: containerID``` --> - Go back to `node1` afterwards ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Scale our service - Services can be scaled in a pinch with the `docker service
update` command .exercise[ - Scale the service to ensure 2 copies per node: ```bash docker service update pingpong --replicas 10 ``` - Check that we have two containers on the current node: ```bash docker ps ``` ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Monitoring deployment progress with `--detach` (New in Docker Engine 17.05) - The CLI can monitor commands that create/update/delete services - `--detach=false` - synchronous operation - the CLI will monitor and display the progress of our request - it exits only when the operation is complete - `--detach=true` - asynchronous operation - the CLI just submits our request - it exits as soon as the request is committed into Raft .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## To `--detach` or not to `--detach` - `--detach=false` - great when experimenting, to see what's going on - also great when orchestrating complex deployments <br/>(when you want to wait for a service to be ready before starting another) - `--detach=true` - great for independent operations that can be parallelized - great in headless scripts (where nobody's watching anyway) .warning[`--detach=true` does not complete *faster*. It just *doesn't wait* for completion.] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details ## `--detach` over time - Docker Engine 17.10 and later: the default is `--detach=false` - From Docker Engine 17.05 to 17.09: the default is `--detach=true` - Prior to Docker 17.05: `--detach` doesn't exist (You can watch progress with e.g. 
`watch docker service ps <serviceID>`) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## `--detach` in action .exercise[ - Scale the service to ensure 3 copies per node: ```bash docker service update pingpong --replicas 15 --detach=false ``` - And then to 4 copies per node: ```bash docker service update pingpong --replicas 20 --detach=true ``` ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Expose a service - Services can be exposed, with two special properties: - the public port is available on *every node of the Swarm*, - requests coming on the public port are load balanced across all instances. - This is achieved with option `-p/--publish`; as an approximation: `docker run -p → docker service create -p` - If you indicate a single port number, it will be mapped on a port starting at 30000 <br/>(vs. 32768 for single container mapping) - You can indicate two port numbers to set the public port number <br/>(just like with `docker run -p`) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Expose ElasticSearch on its default port .exercise[ - Create an ElasticSearch service (and give it a name while we're at it): ```bash docker service create --name search --publish 9200:9200 --replicas 7 \ elasticsearch`:2` ``` ] Note: don't forget the **:2**! The latest version of the ElasticSearch image won't start without mandatory configuration. .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Tasks lifecycle - During the deployment, you will be able to see multiple states: - assigned (the task has been assigned to a specific node) - preparing (this mostly means "pulling the image") - starting - running - When a task is terminated (stopped, killed...) 
it cannot be restarted (A replacement task will be created) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details  .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Test our service - We mapped port 9200 on the nodes, to port 9200 in the containers - Let's try to reach that port! .exercise[ <!-- Give it a few seconds to be ready ```bash sleep 5``` --> - Try the following command: ```bash curl localhost:9200 ``` ] (If you get `Connection refused`: congratulations, you are very fast indeed! Just try again.) ElasticSearch serves a little JSON document with some basic information about this instance, including a randomly-generated super-hero name. .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Test the load balancing - If we repeat our `curl` command multiple times, we will see different names .exercise[ - Send 10 requests, and see which instances serve them: ```bash for N in $(seq 1 10); do curl -s localhost:9200 | jq .name done ``` ] Note: if you don't have `jq` on your Play-With-Docker instance, just install it: ``` apk add --no-cache jq ``` .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Load balancing results Traffic is handled by our cluster's [TCP routing mesh]( https://docs.docker.com/engine/swarm/ingress/). Each request is served by one of the 7 instances, in rotation. Note: if you try to access the service from your browser, you will probably see the same instance name over and over, because your browser (unlike curl) will try to re-use the same connection.
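
To check the rotation without eyeballing names, we can count how many requests each instance served. This is a rough sketch, assuming the `search` service is still published on `localhost:9200` and that `curl` and `jq` are installed; the helper names `tally` and `sample_instances` are made up for this example.

```bash
# Sketch: count identical lines read from stdin, most frequent first.
tally() {
  sort | uniq -c | sort -rn
}

# Send COUNT requests (default: 21) to URL (default: localhost:9200)
# and show how many of them each ElasticSearch instance answered.
sample_instances() {
  local count="${1:-21}" url="${2:-localhost:9200}"
  for I in $(seq 1 "$count"); do
    curl -s "$url" | jq -r .name
  done | tally
}
```

Running `sample_instances 21` against 7 replicas should show each instance name with a similar count if requests really are served in rotation.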
.debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Under the hood of the TCP routing mesh - Load balancing is done by IPVS - IPVS is a high-performance, in-kernel load balancer - It's been around for a long time (merged in the kernel since 2.4) - Each node runs a local load balancer (Allowing connections to be routed directly to the destination, without extra hops) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Managing inbound traffic There are many ways to deal with inbound traffic on a Swarm cluster. - Put all (or a subset) of your nodes in a DNS `A` record - Assign your nodes (or a subset) to an ELB - Use a virtual IP and make sure that it is assigned to an "alive" node - etc. .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: btw-labels ## Managing HTTP traffic - The TCP routing mesh doesn't parse HTTP headers - If you want to place multiple HTTP services on port 80, you need something more - You can set up NGINX or HAProxy on port 80 to do the virtual host switching - Docker Universal Control Plane provides its own [HTTP routing mesh]( https://docs.docker.com/datacenter/ucp/2.1/guides/admin/configure/use-domain-names-to-access-services/) - add a specific label starting with `com.docker.ucp.mesh.http` to your services - labels are detected automatically and dynamically update the configuration .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: btw-labels ## You should use labels - Labels are a great way to attach arbitrary information to services - Examples: - HTTP vhost of a web app or web service - backup schedule for a stateful service - owner of a service (for billing, paging...) - etc. 
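
As an illustration of the "owner of a service" example, labels can be set at creation time with `--label` and used later to filter `docker service ls`. This is a sketch only: the label key `com.example.owner` and both function names are hypothetical, so pick whatever naming scheme suits your organization.

```bash
# Hypothetical label key (a reverse-DNS style prefix helps avoid clashes).
OWNER_LABEL="com.example.owner"

# Create a service tagged with its owner.
# Arguments: service name, owner, image (all placeholders).
create_owned_service() {
  local name="$1" owner="$2" image="$3"
  docker service create --name "$name" --label "$OWNER_LABEL=$owner" "$image"
}

# List the names of all services belonging to a given owner.
services_owned_by() {
  docker service ls --filter "label=$OWNER_LABEL=$1" --format '{{.Name}}'
}
```

For instance, `create_owned_service web ops nginx` followed by `services_owned_by ops` should list `web`.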
.debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Pro-tip for ingress traffic management - It is possible to use *local* networks with Swarm services - This means that you can do something like this: ```bash docker service create --network host --mode global traefik ... ``` (This runs the `traefik` load balancer on each node of your cluster, in the `host` network) - This gives you native performance (no iptables, no proxy, no nothing!) - The load balancer will "see" the clients' IP addresses - But: a container cannot simultaneously be in the `host` network and another network (You will have to route traffic to containers using exposed ports or UNIX sockets) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: extra-details ## Using local networks (`host`, `macvlan` ...) - It is possible to connect services to local networks - Using the `host` network is fairly straightforward (With the caveats described on the previous slide) - Other network drivers are a bit more complicated (IP allocation may have to be coordinated between nodes) - See for instance [this guide]( https://docs.docker.com/engine/userguide/networking/get-started-macvlan/ ) to get started on `macvlan` - See [this PR](https://github.com/moby/moby/pull/32981) for more information about local network drivers in Swarm mode .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Visualize container placement - Let's leverage the Docker API! 
.exercise[ - Get the source code of this simple-yet-beautiful visualization app: ```bash cd ~ git clone https://github.com/dockersamples/docker-swarm-visualizer ``` - Build and run the Swarm visualizer: ```bash cd docker-swarm-visualizer docker-compose up -d ``` <!-- ```longwait Creating dockerswarmvisualizer_viz_1``` --> ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Connect to the visualization webapp - It runs a web server on port 8080 .exercise[ - Point your browser to port 8080 of your node1's public IP address (If you use Play-With-Docker, click on the (8080) badge) <!-- ```open http://node1:8080``` --> ] - The webapp updates the display automatically (you don't need to reload the page) - It only shows Swarm services (not standalone containers) - It shows when nodes go down - It has some glitches (it's not Carrier-Grade Enterprise-Compliant ISO-9001 software) .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Why This Is More Important Than You Think - The visualizer accesses the Docker API *from within a container* - This is a common pattern: run container management tools *in containers* - Instead of viewing your cluster, this could take care of logging, metrics, autoscaling ... - We can run it within a service, too! We won't do it, but the command would look like: ```bash docker service create \ --mount source=/var/run/docker.sock,type=bind,target=/var/run/docker.sock \ --name viz --constraint node.role==manager ... ``` Credits: the visualization code was written by [Francisco Miranda](https://github.com/maroshii). <br/> [Mano Marks](https://twitter.com/manomarks) adapted it to Swarm and maintains it.
.debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- ## Terminate our services - Before moving on, we will remove those services - `docker service rm` can accept multiple service names or IDs - `docker service ls` can accept the `-q` flag - A shell snippet a day keeps the cruft away .exercise[ - Remove all services with this one-liner: ```bash docker service ls -q | xargs docker service rm ``` ] .debug[[swarm/firstservice.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/firstservice.md)] --- class: pic .interstitial[] --- name: toc-our-app-on-swarm class: title Our app on Swarm .nav[ [Previous section](#toc-running-our-first-swarm-service) | [Back to table of contents](#toc-chapter-2) | [Next section](#toc-hosting-our-own-registry) ] .debug[(automatically generated title slide)] --- # Our app on Swarm In this part, we will: - **build** images for our app, - **ship** these images with a registry, - **run** services using these images. .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- ## Why do we need to ship our images? - When we do `docker-compose up`, images are built for our services - These images are present only on the local node - We need these images to be distributed on the whole Swarm - The easiest way to achieve that is to use a Docker registry - Once our images are on a registry, we can reference them when creating our services .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- class: extra-details ## Build, ship, and run, for a single service If we had only one service (built from a `Dockerfile` in the current directory), our workflow could look like this: ``` docker build -t jpetazzo/doublerainbow:v0.1 .
docker push jpetazzo/doublerainbow:v0.1 docker service create jpetazzo/doublerainbow:v0.1 ``` We just have to adapt this to our application, which has 4 services! .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- ## The plan - Build on our local node (`node1`) - Tag images so that they are named `localhost:5000/servicename` - Upload them to a registry - Create services using the images .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- ## Which registry do we want to use? .small[ - **Docker Hub** - hosted by Docker Inc. - requires an account (free, no credit card needed) - images will be public (unless you pay) - located in AWS EC2 us-east-1 - **Docker Trusted Registry** - self-hosted commercial product - requires a subscription (free 30-day trial available) - images can be public or private - located wherever you want - **Docker open source registry** - self-hosted barebones repository hosting - doesn't require anything - doesn't come with anything either - located wherever you want ] .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- class: extra-details ## Using Docker Hub *If we wanted to use the Docker Hub...* - We would log into the Docker Hub: ```bash docker login ``` - And in the following slides, we would use our Docker Hub login (e.g. `jpetazzo`) instead of the registry address (i.e. 
`127.0.0.1:5000`) .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- class: extra-details ## Using Docker Trusted Registry *If we wanted to use DTR, we would...* - Make sure we have a Docker Hub account - [Activate a Docker Datacenter subscription]( https://hub.docker.com/enterprise/trial/) - Install DTR on our machines - Use `dtraddress:port/user` instead of the registry address *This is outside the scope of this workshop!* .debug[[swarm/ourapponswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ourapponswarm.md)] --- class: pic .interstitial[] --- name: toc-hosting-our-own-registry class: title Hosting our own registry .nav[ [Previous section](#toc-our-app-on-swarm) | [Back to table of contents](#toc-chapter-2) | [Next section](#toc-global-scheduling) ] .debug[(automatically generated title slide)] --- # Hosting our own registry - We need to run a `registry:2` container <br/>(make sure you specify tag `:2` to run the new version!) - It will store images and layers to the local filesystem <br/>(but you can add a config file to use S3, Swift, etc.) - Docker *requires* TLS when communicating with the registry - except for registries on `127.0.0.0/8` (i.e.
`localhost`) - or with the Engine flag `--insecure-registry` <!-- --> - Our strategy: publish the registry container on port 5000, <br/>so that it's available through `127.0.0.1:5000` on each node .debug[[swarm/hostingregistry.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/hostingregistry.md)] --- ## Deploying the registry - We will create a single-instance service, publishing its port on the whole cluster .exercise[ - Create the registry service: ```bash docker service create --name registry --publish 5000:5000 registry:2 ``` - Now try the following command; it should return `{"repositories":[]}`: ```bash curl 127.0.0.1:5000/v2/_catalog ``` ] .debug[[swarm/hostingregistry.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/hostingregistry.md)] --- ## Testing our local registry - We can retag a small image, and push it to the registry .exercise[ - Make sure we have the busybox image, and retag it: ```bash docker pull busybox docker tag busybox 127.0.0.1:5000/busybox ``` - Push it: ```bash docker push 127.0.0.1:5000/busybox ``` ] .debug[[swarm/testingregistry.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/testingregistry.md)] --- ## Checking what's on our local registry - The registry API has endpoints to query what's there .exercise[ - Ensure that our busybox image is now in the local registry: ```bash curl http://127.0.0.1:5000/v2/_catalog ``` ] The curl command should now output: ```json {"repositories":["busybox"]} ``` .debug[[swarm/testingregistry.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/testingregistry.md)] --- ## Build, tag, and push our container images - Compose has named our images `dockercoins_XXX` for each service - We need to retag them (to `127.0.0.1:5000/XXX:v1`) and push them .exercise[ - Set `REGISTRY` and `TAG` environment variables to use our local registry - And run this little for loop: ```bash cd 
~/container.training/dockercoins REGISTRY=127.0.0.1:5000 TAG=v1 for SERVICE in hasher rng webui worker; do docker tag dockercoins_$SERVICE $REGISTRY/$SERVICE:$TAG docker push $REGISTRY/$SERVICE:$TAG done ``` ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Overlay networks - SwarmKit integrates with overlay networks - Networks are created with `docker network create` - Make sure to specify that you want an *overlay* network <br/>(otherwise you will get a local *bridge* network by default) .exercise[ - Create an overlay network for our application: ```bash docker network create --driver overlay dockercoins ``` ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Viewing existing networks - Let's confirm that our network was created .exercise[ - List existing networks: ```bash docker network ls ``` ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Can you spot the differences? The networks `dockercoins` and `ingress` are different from the other ones. Can you see how? -- - They are using a different kind of ID, reflecting the fact that they are SwarmKit objects instead of "classic" Docker Engine objects. - Their *scope* is `swarm` instead of `local`. - They are using the overlay driver. .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- class: extra-details ## Caveats .warning[In Docker 1.12, you cannot join an overlay network with `docker run --net ...`.] Starting with version 1.13, you can, if the network was created with the `--attachable` flag. *Why is that?* Placing a container on a network requires allocating an IP address for this container. The allocation must be done by a manager node (worker nodes cannot update Raft data).
As a result, `docker run --net ...` requires collaboration with manager nodes. It alters the code path for `docker run`, so it is allowed only under strict circumstances. .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Run the application - First, create the `redis` service; that one is using a Docker Hub image .exercise[ - Create the `redis` service: ```bash docker service create --network dockercoins --name redis redis ``` ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Run the other services - Then, start the other services one by one - We will use the images pushed previously .exercise[ - Start the other services: ```bash REGISTRY=127.0.0.1:5000 TAG=v1 for SERVICE in hasher rng webui worker; do docker service create --network dockercoins --detach=true \ --name $SERVICE $REGISTRY/$SERVICE:$TAG done ``` ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Expose our application web UI - We need to connect to the `webui` service, but it is not publishing any port - Let's reconfigure it to publish a port .exercise[ - Update `webui` so that we can connect to it from outside: ```bash docker service update webui --publish-add 8000:80 --detach=false ``` ] Note: to "de-publish" a port, you would have to specify the container port. <br/>(i.e. in that case, `--publish-rm 80`) .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## What happens when we modify a service? - Let's find out what happened to our `webui` service .exercise[ - Look at the tasks and containers associated with `webui`: ```bash docker service ps webui ``` ] -- The first version of the service (the one that was not exposed) has been shut down.
It has been replaced by the new version, with port 80 accessible from outside. (This will be discussed in more detail in the section about stateful services.) .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Connect to the web UI - The web UI is now available on port 8000, *on all the nodes of the cluster* .exercise[ - If you're using Play-With-Docker, just click on the `(8000)` badge - Otherwise, point your browser to any node, on port 8000 ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Scaling the application - We can change scaling parameters with `docker service update` as well - We will do the equivalent of `docker-compose scale` .exercise[ - Bring up more workers: ```bash docker service update worker --replicas 10 --detach=false ``` - Check the result in the web UI ] You should see the performance peaking at 10 hashes/s (like before).
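The CLI also has a `docker service scale` shorthand for changing the number of replicas. The sketch below wraps it in a small helper (the name `scale_and_show` is hypothetical) that scales a service and then lists its tasks, so we can see where the replicas landed.

```bash
# Hypothetical helper: scale a service, then list its tasks.
# $1 = service name, $2 = desired number of replicas.
# `docker service scale NAME=N` sets the same value as
# `docker service update NAME --replicas N`.
scale_and_show() {
  docker service scale "$1=$2" && docker service ps "$1"
}
```

For instance, `scale_and_show worker 10` would request 10 workers, just like the exercise above.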
.debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- class: pic .interstitial[] --- name: toc-global-scheduling class: title Global scheduling .nav[ [Previous section](#toc-hosting-our-own-registry) | [Back to table of contents](#toc-chapter-2) | [Next section](#toc-integration-with-compose) ] .debug[(automatically generated title slide)] --- # Global scheduling - We want to make the best possible use of the entropy generators on our nodes - We want to run exactly one `rng` instance per node - SwarmKit has a special scheduling mode for that, let's use it - We cannot enable/disable global scheduling on an existing service - We have to destroy and re-create the `rng` service .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Scaling the `rng` service .exercise[ - Remove the existing `rng` service: ```bash docker service rm rng ``` - Re-create the `rng` service with *global scheduling*: ```bash docker service create --name rng --network dockercoins --mode global \ --detach=false $REGISTRY/rng:$TAG ``` - Look at the result in the web UI ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- class: extra-details ## Why do we have to re-create the service? - State reconciliation is handled by a *controller* - The controller knows how to "converge" a scaled service spec to another - It doesn't know how to "transform" a scaled service into a global one <br/> (or vice versa) - This might change in the future (after all, it was possible in 1.12 RC!)
- As of Docker Engine 17.05, other parameters that require removing and re-creating the service (`rm`/`create`) are: - service name - hostname - network .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## Removing everything - Before moving on, let's get a clean slate .exercise[ - Remove *all* the services: ```bash docker service ls -q | xargs docker service rm ``` ] .debug[[swarm/btp-manual.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/btp-manual.md)] --- ## How did we make our app "Swarm-ready"? This app was written in June 2015. (One year before Swarm mode was released.) What did we change to make it compatible with Swarm mode? -- .exercise[ - Go to the app directory: ```bash cd ~/container.training/dockercoins ``` - See modifications in the code: ```bash git log -p --since "4-JUL-2015" -- . ':!*.yml*' ':!*.html' ``` <!-- ```wait commit``` --> <!-- ```keys q``` --> ] .debug[[swarm/swarmready.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmready.md)] --- ## Which files have been changed since then? - Compose files - HTML file (it contains an embedded contextual tweet) - Dockerfiles (to switch to smaller images) - That's it! -- *We didn't change a single line of code in this app since it was written.* -- *The images that were [built in June 2015]( https://hub.docker.com/r/jpetazzo/dockercoins_worker/tags/) (when the app was written) can still run today ... <br/>... in Swarm mode (distributed across a cluster, with load balancing) ... <br/>... without any modification.* .debug[[swarm/swarmready.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmready.md)] --- ## How did we design our app in the first place? - [Twelve-Factor App](https://12factor.net/) principles - Service discovery using DNS names - Initially implemented as "links" - Then "ambassadors" - And now "services" - Existing apps might require more changes!
.debug[[swarm/swarmready.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmready.md)] --- class: btp-manual ## Integration with Compose - We saw how to manually build, tag, and push images to a registry - But ... -- class: btp-manual *"I'm so glad that my deployment relies on ten nautical miles of Shell scripts"* *(No-one, ever)* -- class: btp-manual - Let's see how we can streamline this process! .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: pic .interstitial[] --- name: toc-integration-with-compose class: title Integration with Compose .nav[ [Previous section](#toc-global-scheduling) | [Back to table of contents](#toc-chapter-2) | [Next section](#toc-securing-overlay-networks) ] .debug[(automatically generated title slide)] --- # Integration with Compose - Compose is great for local development - It can also be used to manage image lifecycle (i.e. build images and push them to a registry) - Compose files *v2* are great for local development - Compose files *v3* can also be used for production deployments!
.debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Compose file version 3 (New in Docker Engine 1.13) - Almost identical to version 2 - Can be directly used by a Swarm cluster through `docker stack ...` commands - Introduces a `deploy` section to pass Swarm-specific parameters - Resource limits are moved to this `deploy` section - See [here](https://github.com/aanand/docker.github.io/blob/8524552f99e5b58452fcb1403e1c273385988b71/compose/compose-file.md#upgrading) for the complete list of changes - Supersedes *Distributed Application Bundles* (JSON payload describing an application; could be generated from a Compose file) .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Our first stack We need a registry to move images around. Without a stack file, it would be deployed with the following command: ```bash docker service create --publish 5000:5000 registry:2 ``` Now, we are going to deploy it with the following stack file: ```yaml version: "3" services: registry: image: registry:2 ports: - "5000:5000" ``` .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Checking our stack files - All the stack files that we will use are in the `stacks` directory .exercise[ - Go to the `stacks` directory: ```bash cd ~/container.training/stacks ``` - Check `registry.yml`: ```bash cat registry.yml ``` ] .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Deploying our first stack - All stack manipulation commands start with `docker stack` - Under the hood, they map to `docker service` commands - Stacks have a *name* (which also serves as a namespace) - Stacks are specified with the aforementioned Compose file format version 3 .exercise[ - Deploy our local 
registry: ```bash docker stack deploy registry --compose-file registry.yml ``` ] .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Inspecting stacks - `docker stack ps` shows the detailed state of all services of a stack .exercise[ - Check that our registry is running correctly: ```bash docker stack ps registry ``` - Confirm that we get the same output with the following command: ```bash docker service ps registry_registry ``` ] .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: btp-manual ## Specifics of stack deployment Our registry is not *exactly* identical to the one deployed with `docker service create`! - Each stack gets its own overlay network - Services of the stack are connected to this network <br/>(unless specified differently in the Compose file) - Services get network aliases matching their name in the Compose file <br/>(just like when Compose brings up an app specified in a v2 file) - Services are explicitly named `<stack_name>_<service_name>` - Services and tasks also get an internal label indicating which stack they belong to .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: btp-auto ## Testing our local registry - Connecting to port 5000 *on any node of the cluster* routes us to the registry - Therefore, we can use `localhost:5000` or `127.0.0.1:5000` as our registry .exercise[ - Issue the following API request to the registry: ```bash curl 127.0.0.1:5000/v2/_catalog ``` ] It should return: ```json {"repositories":[]} ``` If that doesn't work, retry a few times; perhaps the container is still starting.
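Retrying by hand works, but the wait can also be scripted. Here is a minimal sketch of a polling loop; the function name `wait_for_registry` and the default number of attempts and delay are assumptions chosen for illustration, while the URL is the one used in this workshop.

```bash
# Hypothetical helper: poll the registry catalog until it answers.
# $1 = URL (defaults to the workshop registry), $2 = max attempts,
# $3 = delay in seconds between attempts (illustrative defaults).
wait_for_registry() {
  local url attempts delay i
  url="${1:-http://127.0.0.1:5000/v2/_catalog}"
  attempts="${2:-10}"
  delay="${3:-1}"
  i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: treat HTTP errors as failures; -s: no progress output
    if curl -fs "$url"; then
      echo
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  echo "registry not reachable after $attempts attempts" >&2
  return 1
}
```

Calling `wait_for_registry` with no arguments prints the catalog as soon as the registry responds, and returns a non-zero status if it never does.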
.debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: btp-auto ## Pushing an image to our local registry - We can retag a small image, and push it to the registry .exercise[ - Make sure we have the busybox image, and retag it: ```bash docker pull busybox docker tag busybox 127.0.0.1:5000/busybox ``` - Push it: ```bash docker push 127.0.0.1:5000/busybox ``` ] .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: btp-auto ## Checking what's on our local registry - The registry API has endpoints to query what's there .exercise[ - Ensure that our busybox image is now in the local registry: ```bash curl http://127.0.0.1:5000/v2/_catalog ``` ] The curl command should now output: ```json {"repositories":["busybox"]} ``` .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Building and pushing stack services - When using Compose file version 2 and above, you can specify *both* `build` and `image` - When both keys are present: - Compose does "business as usual" (uses `build`) - but the resulting image is named as indicated by the `image` key <br/> (instead of `<projectname>_<servicename>:latest`) - it can be pushed to a registry with `docker-compose push` - Example: ```yaml webfront: build: www image: myregistry.company.net:5000/webfront ``` .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Using Compose to build and push images .exercise[ - Try it: ```bash docker-compose -f dockercoins.yml build docker-compose -f dockercoins.yml push ``` ] Let's have a look at the `dockercoins.yml` file while this is building and pushing.
.debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ```yaml version: "3" services: rng: build: dockercoins/rng image: ${REGISTRY-127.0.0.1:5000}/rng:${TAG-latest} deploy: mode: global ... redis: image: redis ... worker: build: dockercoins/worker image: ${REGISTRY-127.0.0.1:5000}/worker:${TAG-latest} ... deploy: replicas: 10 ``` .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Deploying the application - Now that the images are on the registry, we can deploy our application stack .exercise[ - Create the application stack: ```bash docker stack deploy dockercoins --compose-file dockercoins.yml ``` ] We can now connect to any of our nodes on port 8000, and we will see the familiar hashing speed graph. .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Maintaining multiple environments There are many ways to handle variations between environments. - Compose loads `docker-compose.yml` and (if it exists) `docker-compose.override.yml` - Compose can load alternate file(s) by setting the `-f` flag or the `COMPOSE_FILE` environment variable - Compose files can *extend* other Compose files, selectively including services: ```yaml web: extends: file: common-services.yml service: webapp ``` See [this documentation page](https://docs.docker.com/compose/extends/) for more details about these techniques. .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: extra-details ## Good to know ... - Compose file version 3 adds the `deploy` section - Further versions (3.1, ...) add more features (secrets, configs ...) - You can re-run `docker stack deploy` to update a stack - You can make manual changes with `docker service update` ... - ... 
But they will be wiped out each time you `docker stack deploy` (That's the intended behavior, when one thinks about it!) - `extends` doesn't work with `docker stack deploy` (But you can use `docker-compose config` to "flatten" your configuration) .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- ## Summary - We've seen how to set up a Swarm - We've used it to host our own registry - We've built our app container images - We've used the registry to host those images - We've deployed and scaled our application - We've seen how to use Compose to streamline deployments - Awesome job, team! .debug[[swarm/compose2swarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/compose2swarm.md)] --- class: self-paced ## Before we continue ... The following exercises assume that you have a 5-node Swarm cluster. If you come here from a previous tutorial and still have your cluster: great! Otherwise: check [part 1](#part-1) to learn how to set up your own cluster. We pick up exactly where we left you, so we assume that you have: - a five-node Swarm cluster, - a self-hosted registry, - DockerCoins up and running. The next slide has a cheat sheet if you need to set that up in a pinch.
.debug[[swarm/operatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/operatingswarm.md)] --- class: self-paced ## Catching up Assuming you have 5 nodes provided by [Play-With-Docker](http://www.play-with-docker.com/), do this from `node1`: ```bash docker swarm init --advertise-addr eth0 TOKEN=$(docker swarm join-token -q manager) for N in $(seq 2 5); do DOCKER_HOST=tcp://node$N:2375 docker swarm join --token $TOKEN node1:2377 done git clone https://github.com/jpetazzo/container.training cd container.training/stacks docker stack deploy --compose-file registry.yml registry docker-compose -f dockercoins.yml build docker-compose -f dockercoins.yml push docker stack deploy --compose-file dockercoins.yml dockercoins ``` You should now be able to connect to port 8000 and see the DockerCoins web UI. .debug[[swarm/operatingswarm.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/operatingswarm.md)] --- class: pic .interstitial[] --- name: toc-securing-overlay-networks class: title Securing overlay networks .nav[ [Previous section](#toc-integration-with-compose) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-updating-services) ] .debug[(automatically generated title slide)] --- # Securing overlay networks - By default, overlay networks are using plain VXLAN encapsulation (~Ethernet over UDP, using SwarmKit's control plane for ARP resolution) - Encryption can be enabled on a per-network basis (It will use IPsec encryption provided by the kernel, leveraging hardware acceleration) - This is only for the `overlay` driver (Other drivers/plugins will use different mechanisms) .debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)] --- ## Creating two networks: encrypted and not - Let's create two networks for testing purposes .exercise[ - Create an "insecure" network: ```bash docker network create insecure --driver overlay --attachable ``` -
Create a "secure" network: ```bash docker network create secure --opt encrypted --driver overlay --attachable ``` ] .warning[Make sure that you don't typo that option; errors are silently ignored!] .debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)] --- ## Deploying a dual-homed web server - Let's use good old NGINX - We will attach it to both networks - We will use a placement constraint to make sure that it is on a different node .exercise[ - Create a web server running somewhere else: ```bash docker service create --name web \ --network secure --network insecure \ --constraint node.hostname!=node1 \ nginx ``` ] .debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)] --- ## Sniff HTTP traffic - We will use `ngrep`, which lets us grep network traffic - We will run it in a container, using host networking to access the host's interfaces .exercise[ - Sniff network traffic and display all packets containing "HTTP": ```bash docker run --net host nicolaka/netshoot ngrep -tpd eth0 HTTP ``` <!-- ```wait User-Agent``` --> <!-- ```keys ^C``` --> ] -- Seeing tons of HTTP requests? Shut down your DockerCoins workers: ```bash docker service update dockercoins_worker --replicas=0 ``` .debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)] --- ## Check that we are, indeed, sniffing traffic - Let's see if we can intercept our traffic with Google! .exercise[ - Open a new terminal - Issue an HTTP request to Google (or anything you like): ```bash curl google.com ``` ] The ngrep container will display one `#` per packet traversing the network interface. When you do the `curl`, you should see the HTTP request in clear text in the output.
.debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)] --- class: extra-details ## If you are using Play-With-Docker, Vagrant, etc. - You will probably have *two* network interfaces - One interface will be used for outbound traffic (to Google) - The other one will be used for internode traffic - You might have to adapt/relaunch the `ngrep` command to specify the right one! .debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)] --- ## Try to sniff traffic across overlay networks - We will run `curl web` through both secure and insecure networks .exercise[ - Access the web server through the insecure network: ```bash docker run --rm --net insecure nicolaka/netshoot curl web ``` - Now do the same through the secure network: ```bash docker run --rm --net secure nicolaka/netshoot curl web ``` ] When you run the first command, you will see HTTP fragments. <br/> However, when you run the second one, only `#` will show up. 
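Since a mistyped `--opt` is silently ignored, it can be useful to check a network's options directly, in addition to sniffing. The sketch below assumes that a network created with `--opt encrypted` lists an `encrypted` key in the `Options` field shown by `docker network inspect`; the helper name is hypothetical.

```bash
# Hypothetical helper: succeed if the network's driver options contain
# an "encrypted" key (assumption: this is how `--opt encrypted` shows up
# in the output of `docker network inspect`).
# $1 = network name.
network_is_encrypted() {
  docker network inspect --format '{{json .Options}}' "$1" \
    | grep -q '"encrypted"'
}
```

For instance, `network_is_encrypted secure && echo yes` should print `yes`, while the same check on `insecure` should print nothing.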
.debug[[swarm/ipsec.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/ipsec.md)]

---

class: pic

.interstitial[]

---

name: toc-updating-services
class: title

Updating services

.nav[
[Previous section](#toc-securing-overlay-networks)
|
[Back to table of contents](#toc-chapter-3)
|
[Next section](#toc-rolling-updates)
]

.debug[(automatically generated title slide)]

---
# Updating services

- We want to make changes to the web UI

- The process is as follows:

  - edit code

  - build new image

  - ship new image

  - run new image

.debug[[swarm/updatingservices.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/updatingservices.md)]

---

## Updating a single service the hard way

- To update a single service, we could do the following:
  ```bash
  REGISTRY=localhost:5000
  TAG=v0.3
  IMAGE=$REGISTRY/dockercoins_webui:$TAG
  docker build -t $IMAGE webui/
  docker push $IMAGE
  docker service update dockercoins_webui --image $IMAGE
  ```

- Make sure to tag your images properly: update the `TAG` at each iteration

  (When you check which images are running, you want these tags to be uniquely identifiable)

.debug[[swarm/updatingservices.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/updatingservices.md)]

---

## Updating services the easy way

- With the Compose integration, all we have to do is:
  ```bash
  export TAG=v0.3
  docker-compose -f composefile.yml build
  docker-compose -f composefile.yml push
  docker stack deploy -c composefile.yml nameofstack
  ```

--

- That's exactly what we used earlier to deploy the app

- We don't need to learn new commands!

.debug[[swarm/updatingservices.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/updatingservices.md)]

---

## Changing the code

- Let's make the numbers on the Y axis bigger!
.exercise[ - Edit the file `webui/files/index.html`: ```bash vi dockercoins/webui/files/index.html ``` <!-- ```wait <title>``` --> - Locate the `font-size` CSS attribute and increase it (at least double it) <!-- ```keys /font-size``` ```keys ^J``` ```keys lllllllllllllcw45px``` ```keys ^[``` ] ```keys :wq``` ```keys ^J``` --> - Save and exit ] .debug[[swarm/updatingservices.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/updatingservices.md)] --- ## Build, ship, and run our changes - Four steps: 1. Set (and export!) the `TAG` environment variable 2. `docker-compose build` 3. `docker-compose push` 4. `docker stack deploy` .exercise[ - Build, ship, and run: ```bash export TAG=v0.3 docker-compose -f dockercoins.yml build docker-compose -f dockercoins.yml push docker stack deploy -c dockercoins.yml dockercoins ``` ] .debug[[swarm/updatingservices.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/updatingservices.md)] --- ## Viewing our changes - Wait at least 10 seconds (for the new version to be deployed) - Then reload the web UI - Or just mash "reload" frantically - ... Eventually the legend on the left will be bigger! 
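
Instead of mashing "reload", we can also ask Swarm which image each running task is using. This is a minimal sketch, assuming a Docker CLI recent enough to support `--format` on `docker service ps`; `running_images` is a hypothetical helper name, not something provided by Docker.

```bash
# Hypothetical helper: print the image and current state of the tasks
# that are supposed to be running for the given service.
running_images() {
  docker service ps "$1" \
    --filter desired-state=running \
    --format '{{.Image}}  {{.CurrentState}}'
}
```

Running `running_images dockercoins_webui` once the deployment has settled should show an image ending with the tag that we exported (`v0.3`).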
.debug[[swarm/updatingservices.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/updatingservices.md)]

---

class: pic

.interstitial[]

---

name: toc-rolling-updates
class: title

Rolling updates

.nav[
[Previous section](#toc-updating-services)
|
[Back to table of contents](#toc-chapter-3)
|
[Next section](#toc-swarmkit-debugging-tools)
]

.debug[(automatically generated title slide)]

---
# Rolling updates

- Let's change a scaled service: `worker`

.exercise[

- Edit `worker/worker.py`

- Locate the `sleep` instruction and change the delay

- Build, ship, and run our changes:
  ```bash
  export TAG=v0.4
  docker-compose -f dockercoins.yml build
  docker-compose -f dockercoins.yml push
  docker stack deploy -c dockercoins.yml dockercoins
  ```

]

.debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)]

---

## Viewing our update as it rolls out

.exercise[

- Check the status of the `dockercoins_worker` service:
  ```bash
  watch docker service ps dockercoins_worker
  ```

<!-- ```wait dockercoins_worker.1``` -->
<!-- ```keys ^C``` -->

- Hide the tasks that are shut down:
  ```bash
  watch -n1 "docker service ps dockercoins_worker | grep -v Shutdown.*Shutdown"
  ```

<!-- ```wait dockercoins_worker.1``` -->
<!-- ```keys ^C``` -->

]

If you had stopped the workers earlier, this will automatically restart them.

By default, SwarmKit does a rolling upgrade, one instance at a time.

We should therefore see the workers being updated one by one.
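
If you would rather script this than watch it, the update state is also available through `docker service inspect`. The sketch below is an illustration under assumptions, not part of the original exercise: `wait_for_update` is a hypothetical helper, and it assumes that the service has been updated at least once (otherwise `.UpdateStatus` may be unset and the template fails).

```bash
# Hypothetical helper: poll a service until its update leaves the "updating" state.
# Assumption: the service has been updated at least once, so .UpdateStatus is set.
wait_for_update() {
  while true; do
    state=$(docker service inspect --format '{{.UpdateStatus.State}}' "$1") || return 1
    echo "$1: $state"
    [ "$state" = "updating" ] || return 0
    sleep 1
  done
}
```

Calling `wait_for_update dockercoins_worker` prints the state once per second and returns as soon as it is anything other than `updating` (for instance `completed` or `paused`).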
.debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)]

---

## Changing the upgrade policy

- We can set upgrade parallelism (how many instances to update at the same time)

- And upgrade delay (how long to wait between two batches of instances)

.exercise[

- Change the parallelism to 2 and the delay to 5 seconds:
  ```bash
  docker service update dockercoins_worker \
    --update-parallelism 2 --update-delay 5s
  ```

]

The current upgrade will continue at a faster pace.

.debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)]

---

## Changing the policy in the Compose file

- The policy can also be updated in the Compose file

- This is done by adding an `update_config` key under the `deploy` key:

  ```yaml
    deploy:
      replicas: 10
      update_config:
        parallelism: 2
        delay: 10s
  ```

.debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)]

---

## Rolling back

- At any time (e.g. before the upgrade is complete), we can roll back:

  - by editing the Compose file and redeploying;

  - or with the special `--rollback` flag

.exercise[

- Try to roll back the service:
  ```bash
  docker service update dockercoins_worker --rollback
  ```

]

What happens with the web UI graph?
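
To see what a rollback changes, one option is to compare the current and previous service definitions before and after running it. This is a hedged sketch: `show_definitions` is a hypothetical helper, and it assumes that the service has been updated at least once, so that `.PreviousSpec` is present in the output of `docker service inspect` (if it is not, the template fails).

```bash
# Hypothetical helper: show the image in the current and previous service definitions.
# Assumption: the service has been updated at least once, so .PreviousSpec is set.
show_definitions() {
  docker service inspect "$1" --format \
    'current={{.Spec.TaskTemplate.ContainerSpec.Image}} previous={{.PreviousSpec.TaskTemplate.ContainerSpec.Image}}'
}
```

Run `show_definitions dockercoins_worker` before and after the rollback, and compare the two lines.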
.debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)] --- ## The fine print with rollback - Rollback reverts to the previous service definition - If we visualize successive updates as a stack: - it doesn't "pop" the latest update - it "pushes" a copy of the previous update on top - ergo, rolling back twice does nothing - "Service definition" includes rollout cadence - Each `docker service update` command = a new service definition .debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)] --- class: extra-details ## Timeline of an upgrade - SwarmKit will upgrade N instances at a time <br/>(following the `update-parallelism` parameter) - New tasks are created, and their desired state is set to `Ready` <br/>.small[(this pulls the image if necessary, ensures resource availability, creates the container ... without starting it)] - If the new tasks fail to get to `Ready` state, go back to the previous step <br/>.small[(SwarmKit will try again and again, until the situation is addressed or desired state is updated)] - When the new tasks are `Ready`, it sets the old tasks desired state to `Shutdown` - When the old tasks are `Shutdown`, it starts the new tasks - Then it waits for the `update-delay`, and continues with the next batch of instances .debug[[swarm/rollingupdates.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/rollingupdates.md)] --- ## Getting task information for a given node - You can see all the tasks assigned to a node with `docker node ps` - It shows the *desired state* and *current state* of each task - `docker node ps` shows info about the current node - `docker node ps <node_name_or_id>` shows info for another node - `docker node ps -f <filter_expression>` allows to select which tasks to show ```bash # Show only tasks that are supposed to be running docker node ps -f 
desired-state=running # Show only tasks whose name contains the string "front" docker node ps -f name=front ``` .debug[[swarm/nodeinfo.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/nodeinfo.md)] --- class: pic .interstitial[] --- name: toc-swarmkit-debugging-tools class: title SwarmKit debugging tools .nav[ [Previous section](#toc-rolling-updates) | [Back to table of contents](#toc-chapter-3) | [Next section](#toc-secrets-management-and-encryption-at-rest) ] .debug[(automatically generated title slide)] --- # SwarmKit debugging tools - The SwarmKit repository comes with debugging tools - They are *low level* tools; not for general use - We are going to see two of these tools: - `swarmctl`, to communicate directly with the SwarmKit API - `swarm-rafttool`, to inspect the content of the Raft log .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## Building the SwarmKit tools - We are going to install a Go compiler, then download SwarmKit source and build it .exercise[ - Download, compile, and install SwarmKit with this one-liner: ```bash docker run -v /usr/local/bin:/go/bin golang \ go get `-v` github.com/docker/swarmkit/... ``` ] Remove `-v` if you don't like verbose things. Shameless promo: for more Go and Docker love, check [this blog post](http://jpetazzo.github.io/2016/09/09/go-docker/)! Note: in the unfortunate event of SwarmKit *master* branch being broken, the build might fail. In that case, just skip the Swarm tools section. 
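
Since the build can fail when the SwarmKit *master* branch is broken, a quick check that the binaries landed in the `PATH` can save some confusion later. This is a small sketch, not part of the original exercise; `check_tools` is a hypothetical helper name.

```bash
# Hypothetical helper: report which of the given commands are available.
# Returns 0 if all of them were found, 1 otherwise.
check_tools() {
  missing=0
  for tool in "$@"; do
    if command -v "$tool" >/dev/null 2>&1; then
      echo "found: $tool"
    else
      echo "missing: $tool"
      missing=1
    fi
  done
  return "$missing"
}
```

For the tools used in this section, that would be `check_tools swarmctl swarm-rafttool`.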
.debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## Getting cluster-wide task information - The Docker API doesn't expose this directly (yet) - But the SwarmKit API does - We are going to query it with `swarmctl` - `swarmctl` is an example program showing how to interact with the SwarmKit API .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## Using `swarmctl` - The Docker Engine places the SwarmKit control socket in a special path - You need root privileges to access it .exercise[ - If you are using Play-With-Docker, set the following alias: ```bash alias swarmctl='/lib/ld-musl-x86_64.so.1 /usr/local/bin/swarmctl \ --socket /var/run/docker/swarm/control.sock' ``` - Otherwise, set the following alias: ```bash alias swarmctl='sudo swarmctl \ --socket /var/run/docker/swarm/control.sock' ``` ] .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## `swarmctl` in action - Let's review a few useful `swarmctl` commands .exercise[ - List cluster nodes (that's equivalent to `docker node ls`): ```bash swarmctl node ls ``` - View all tasks across all services: ```bash swarmctl task ls ``` ] .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## `swarmctl` notes - SwarmKit is vendored into the Docker Engine - If you want to use `swarmctl`, you need the exact version of SwarmKit that was used in your Docker Engine - Otherwise, you might get some errors like: ``` Error: grpc: failed to unmarshal the received message proto: wrong wireType = 0 ``` - With Docker 1.12, the control socket was in `/var/lib/docker/swarm/control.sock` .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## `swarm-rafttool` - SwarmKit stores all 
its important data in a distributed log using the Raft protocol (This log is also simply called the "Raft log") - You can decode that log with `swarm-rafttool` - This is a great tool to understand how SwarmKit works - It can also be used in forensics or troubleshooting (But consider it as a *very low level* tool!) .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## The powers of `swarm-rafttool` With `swarm-rafttool`, you can: - view the latest snapshot of the cluster state; - view the Raft log (i.e. changes to the cluster state); - view specific objects from the log or snapshot; - decrypt the Raft data (to analyze it with other tools). It *cannot* work on live files, so you must stop Docker or make a copy first. .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## Using `swarm-rafttool` - First, let's make a copy of the current Swarm data .exercise[ - If you are using Play-With-Docker, the Docker data directory is `/graph`: ```bash cp -r /graph/swarm /swarmdata ``` <!-- ```wait cp: cannot stat``` --> - Otherwise, it is in the default `/var/lib/docker`: ```bash sudo cp -r /var/lib/docker/swarm /swarmdata ``` ] .debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- ## Dumping the Raft log - We have to indicate the path holding the Swarm data (Otherwise `swarm-rafttool` will try to use the live data, and complain that it's locked!) .exercise[ - If you are using Play-With-Docker, you must use the musl linker: ```bash /lib/ld-musl-x86_64.so.1 /usr/local/bin/swarm-rafttool -d /swarmdata/ dump-wal ``` <!-- ```wait -bash:``` --> - Otherwise, you don't need the musl linker but you need to get root: ```bash sudo swarm-rafttool -d /swarmdata/ dump-wal ``` ] Reminder: this is a very low-level tool, requiring a knowledge of SwarmKit's internals! 
.debug[[swarm/swarmtools.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/swarmtools.md)] --- class: pic .interstitial[] --- name: toc-secrets-management-and-encryption-at-rest class: title Secrets management and encryption at rest .nav[ [Previous section](#toc-swarmkit-debugging-tools) | [Back to table of contents](#toc-chapter-4) | [Next section](#toc-least-privilege-model) ] .debug[(automatically generated title slide)] --- # Secrets management and encryption at rest (New in Docker Engine 1.13) - Secrets management = selectively and securely bring secrets to services - Encryption at rest = protect against storage theft or prying - Remember: - control plane is authenticated through mutual TLS, certs rotated every 90 days - control plane is encrypted with AES-GCM, keys rotated every 12 hours - data plane is not encrypted by default (for performance reasons), <br/>but we saw earlier how to enable that with a single flag .debug[[swarm/security.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/security.md)] --- class: secrets ## Secret management - Docker has a "secret safe" (secure key→value store) - You can create as many secrets as you like - You can associate secrets to services - Secrets are exposed as plain text files, but kept in memory only (using `tmpfs`) - Secrets are immutable (at least in Engine 1.13) - Secrets have a max size of 500 KB .debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Creating secrets - Must specify a name for the secret; and the secret itself .exercise[ - Assign [one of the four most commonly used passwords](https://www.youtube.com/watch?v=0Jx8Eay5fWQ) to a secret called `hackme`: ```bash echo love | docker secret create hackme - ``` ] If the secret is in a file, you can simply pass the path to the file. (The special path `-` indicates to read from the standard input.) 
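
One detail worth knowing: `echo` appends a newline, so a secret created with `echo ... | docker secret create` is stored with a trailing newline. If that matters for the application reading the secret, `printf` avoids it. The helper below is a minimal sketch; `create_secret` is a hypothetical name, and passing the value as an argument means that it ends up in your shell history.

```bash
# Hypothetical helper: create a secret from a value, without a trailing newline.
# $1 = secret name, $2 = secret value
create_secret() {
  printf '%s' "$2" | docker secret create "$1" -
}
```

For instance, `create_secret nonewline love` stores exactly 4 bytes (`nonewline` is just an example name).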
.debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Creating better secrets - Picking lousy passwords always leads to security breaches .exercise[ - Let's craft a better password, and assign it to another secret: ```bash base64 /dev/urandom | head -c16 | docker secret create arewesecureyet - ``` ] Note: in the latter case, we don't even know the secret at this point. But Swarm does. .debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Using secrets - Secrets must be handed explicitly to services .exercise[ - Create a dummy service with both secrets: ```bash docker service create \ --secret hackme --secret arewesecureyet \ --name dummyservice \ --constraint node.hostname==$HOSTNAME \ alpine sleep 1000000000 ``` ] We constrain the container to be on the local node for convenience. <br/> (We are going to use `docker exec` in just a moment!) .debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Accessing secrets - Secrets are materialized on `/run/secrets` (which is an in-memory filesystem) .exercise[ - Find the ID of the container for the dummy service: ```bash CID=$(docker ps -q --filter label=com.docker.swarm.service.name=dummyservice) ``` - Enter the container: ```bash docker exec -ti $CID sh ``` - Check the files in `/run/secrets` <!-- ```bash grep . 
/run/secrets/*``` --> <!-- ```bash exit``` --> ] .debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Rotating secrets - You can't change a secret (Sounds annoying at first; but allows clean rollbacks if a secret update goes wrong) - You can add a secret to a service with `docker service update --secret-add` (This will redeploy the service; it won't add the secret on the fly) - You can remove a secret with `docker service update --secret-rm` - Secrets can be mapped to different names by expressing them with a micro-format: ```bash docker service create --secret source=secretname,target=filename ``` .debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Changing our insecure password - We want to replace our `hackme` secret with a better one .exercise[ - Remove the insecure `hackme` secret: ```bash docker service update dummyservice --secret-rm hackme ``` - Add our better secret instead: ```bash docker service update dummyservice \ --secret-add source=arewesecureyet,target=hackme ``` ] Wait for the service to be fully updated with e.g. `watch docker service ps dummyservice`. <br/>(With Docker Engine 17.10 and later, the CLI will wait for you!) .debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)] --- class: secrets ## Checking that our password is now stronger - We will use the power of `docker exec`! .exercise[ - Get the ID of the new container: ```bash CID=$(docker ps -q --filter label=com.docker.swarm.service.name=dummyservice) ``` - Check the contents of the secret files: ```bash docker exec $CID grep -r . 
/run/secrets ``` ]

.debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)]

---

class: secrets

## Secrets in practice

- Can be (ab)used to hold whole configuration files if needed

- If you intend to rotate secret `foo`, call it `foo.N` instead, and map it to `foo`

  (N can be a serial, a timestamp...)

  ```bash
  docker service create --secret source=foo.N,target=foo ...
  ```

- You can update (remove+add) a secret in a single command:

  ```bash
  docker service update ... --secret-rm foo.M --secret-add source=foo.N,target=foo
  ```

- For more details and examples, [check the documentation](https://docs.docker.com/engine/swarm/secrets/)

.debug[[swarm/secrets.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/secrets.md)]

---

class: pic

.interstitial[]

---

name: toc-least-privilege-model
class: title

Least privilege model

.nav[
[Previous section](#toc-secrets-management-and-encryption-at-rest)
|
[Back to table of contents](#toc-chapter-4)
|
[Next section](#toc-dealing-with-stateful-services)
]

.debug[(automatically generated title slide)]

---
# Least privilege model

- All the important data is stored in the "Raft log"

- Manager nodes have read/write access to this data

- Worker nodes have no access to this data

- Workers only receive the minimum amount of data that they need:

  - which services to run
  - network configuration information for these services
  - credentials for these services

- Compromising a worker node does not give access to the full cluster

.debug[[swarm/leastprivilege.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/leastprivilege.md)]

---

## What can I do if I compromise a worker node?
- I can enter the containers running on that node - I can access the configuration and credentials used by these containers - I can inspect the network traffic of these containers - I cannot inspect or disrupt the network traffic of other containers (network information is provided by manager nodes; ARP spoofing is not possible) - I cannot infer the topology of the cluster and its number of nodes - I can only learn the IP addresses of the manager nodes .debug[[swarm/leastprivilege.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/leastprivilege.md)] --- ## Guidelines for workload isolation - Define security levels - Define security zones - Put managers in the highest security zone - Enforce workloads of a given security level to run in a given zone - Enforcement can be done with [Authorization Plugins](https://docs.docker.com/engine/extend/plugins_authorization/) .debug[[swarm/leastprivilege.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/leastprivilege.md)] --- ## Learning more about container security .blackbelt[DC17US: Securing Containers, One Patch At A Time ([video](https://www.youtube.com/watch?v=jZSs1RHwcqo&list=PLkA60AVN3hh-biQ6SCtBJ-WVTyBmmYho8&index=4))] .blackbelt[DC17EU: Container-relevant Upstream Kernel Developments ([video](https://dockercon.docker.com/watch/7JQBpvHJwjdW6FKXvMfCK1))] .blackbelt[DC17EU: What Have Syscalls Done for you Lately? ([video](https://dockercon.docker.com/watch/4ZxNyWuwk9JHSxZxgBBi6J))] .debug[[swarm/leastprivilege.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/leastprivilege.md)] --- ## A reminder about *scope* - Out of the box, Docker API access is "all or nothing" - When someone has access to the Docker API, they can access *everything* - If your developers are using the Docker API to deploy on the dev cluster ... ... and the dev cluster is the same as the prod cluster ... ... 
it means that your devs have access to your production data, passwords, etc. - This can easily be avoided .debug[[swarm/apiscope.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/apiscope.md)] --- ## Fine-grained API access control A few solutions, by increasing order of flexibility: - Use separate clusters for different security perimeters (And different credentials for each cluster) -- - Add an extra layer of abstraction (sudo scripts, hooks, or full-blown PAAS) -- - Enable [authorization plugins] - each API request is vetted by your plugin(s) - by default, the *subject name* in the client TLS certificate is used as user name - example: [user and permission management] in [UCP] [authorization plugins]: https://docs.docker.com/engine/extend/plugins_authorization/ [UCP]: https://docs.docker.com/datacenter/ucp/2.1/guides/ [user and permission management]: https://docs.docker.com/datacenter/ucp/2.1/guides/admin/manage-users/ .debug[[swarm/apiscope.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/apiscope.md)] --- class: pic .interstitial[] --- name: toc-dealing-with-stateful-services class: title Dealing with stateful services .nav[ [Previous section](#toc-least-privilege-model) | [Back to table of contents](#toc-chapter-4) | [Next section](#toc-links-and-resources) ] .debug[(automatically generated title slide)] --- # Dealing with stateful services - First of all, you need to make sure that the data files are on a *volume* - Volumes are host directories that are mounted to the container's filesystem - These host directories can be backed by the ordinary, plain host filesystem ... - ... Or by distributed/networked filesystems - In the latter scenario, in case of node failure, the data is safe elsewhere ... - ... 
And the container can be restarted on another node without data loss

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Building a stateful service experiment

- We will use Redis for this example

- We will expose it on port 10000 to access it easily

.exercise[

- Start the Redis service:
  ```bash
  docker service create --name stateful -p 10000:6379 redis
  ```

- Check that we can connect to it:
  ```bash
  docker run --net host --rm redis redis-cli -p 10000 info server
  ```

]

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Accessing our Redis service easily

- Typing that whole command is going to be tedious

.exercise[

- Define a shell alias to make our lives easier:
  ```bash
  alias redis='docker run --net host --rm redis redis-cli -p 10000'
  ```

- Try it:
  ```bash
  redis info server
  ```

]

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Basic Redis commands

.exercise[

- Check that the `foo` key doesn't exist:
  ```bash
  redis get foo
  ```

- Set it to `bar`:
  ```bash
  redis set foo bar
  ```

- Check that it exists now:
  ```bash
  redis get foo
  ```

]

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Local volumes vs. global volumes

- Global volumes exist in a single namespace

- A global volume can be mounted on any node
  <br/>.small[(bar some restrictions specific to the volume driver in use; e.g. using an EBS-backed volume on a GCE/EC2 mixed cluster)]

- Attaching a global volume to a container lets you start the container anywhere
  <br/>(and retain its data wherever you start it!)

- Global volumes require extra *plugins* (Flocker, Portworx...)
- Docker doesn't come with a default global volume driver at this point - Therefore, we will fall back on *local volumes* .debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)] --- ## Local volumes - We will use the default volume driver, `local` - As the name implies, the `local` volume driver manages *local* volumes - Since local volumes are (duh!) *local*, we need to pin our container to a specific host - We will do that with a *constraint* .exercise[ - Add a placement constraint to our service: ```bash docker service update stateful --constraint-add node.hostname==$HOSTNAME ``` ] .debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)] --- ## Where is our data? - If we look for our `foo` key, it's gone! .exercise[ - Check the `foo` key: ```bash redis get foo ``` - Adding a constraint caused the service to be redeployed: ```bash docker service ps stateful ``` ] Note: even if the constraint ends up being a no-op (i.e. not moving the service), the service gets redeployed. This ensures consistent behavior. .debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)] --- ## Setting the key again - Since our database was wiped out, let's populate it again .exercise[ - Set `foo` again: ```bash redis set foo bar ``` - Check that it's there: ```bash redis get foo ``` ] .debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)] --- ## Updating a service recreates its containers - Let's try to make a trivial update to the service and see what happens .exercise[ - Set a memory limit to our Redis service: ```bash docker service update stateful --limit-memory 100M ``` - Try to get the `foo` key one more time: ```bash redis get foo ``` ] The key is blank again! 
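
Each update created a new task, and therefore a new container. One way to see this is to list all the containers of the service on this node, including the stopped ones. This is a sketch under assumptions: `service_containers` is a hypothetical helper, and it relies on Swarm still keeping the stopped containers of previous tasks around (the task history is limited).

```bash
# Hypothetical helper: list all containers (running or not) that belong to
# the given service on the local node, using the label that Swarm sets on them.
service_containers() {
  docker ps -a \
    --filter "label=com.docker.swarm.service.name=$1" \
    --format '{{.ID}}  {{.Status}}  {{.Names}}'
}
```

With `service_containers stateful`, you should see one running container, plus the ones that were replaced by the previous updates.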
.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Service volumes are ephemeral by default

- Let's highlight what's going on with volumes!

.exercise[

- Check the current list of volumes:
  ```bash
  docker volume ls
  ```

- Carry out a minor update to our Redis service:
  ```bash
  docker service update stateful --limit-memory 200M
  ```

]

Again: all changes trigger the creation of a new task, and therefore a replacement of the existing container;
even when it is not strictly technically necessary.

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## The data is gone again

- What happened to our data?

.exercise[

- The list of volumes is slightly different:
  ```bash
  docker volume ls
  ```

]

(You should see one extra volume.)

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Assigning a persistent volume to the container

- Let's add an explicit volume mount to our service, referencing a named volume

.exercise[

- Update the service with a volume mount:
  ```bash
  docker service update stateful \
         --mount-add type=volume,source=foobarstore,target=/data
  ```

- Check the new volume list:
  ```bash
  docker volume ls
  ```

]

Note: the `local` volume driver automatically creates volumes.
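
To double-check that the mount is now part of the service definition, and to find where the named volume lives on the host, both can be queried with `inspect`. This is a minimal sketch; `service_mounts` and `volume_path` are hypothetical helper names, and the template paths are the ones that I expect in the output of `docker service inspect` and `docker volume inspect`.

```bash
# Hypothetical helper: print the mounts declared in a service definition, as JSON.
service_mounts() {
  docker service inspect "$1" \
    --format '{{json .Spec.TaskTemplate.ContainerSpec.Mounts}}'
}

# Hypothetical helper: print the host directory backing a local volume.
volume_path() {
  docker volume inspect --format '{{.Mountpoint}}' "$1"
}
```

Here, that would be `service_mounts stateful` and `volume_path foobarstore`.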
.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Checking that data is now persisted correctly

.exercise[

- Store something in the `foo` key:
  ```bash
  redis set foo barbar
  ```

- Update the service with yet another trivial change:
  ```bash
  docker service update stateful --limit-memory 300M
  ```

- Check that `foo` is still set:
  ```bash
  redis get foo
  ```

]

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Recap

- The service must commit its state to disk when being shut down.red[*]

  (Shutdown = being sent a `TERM` signal)

- The state must be written on files located on a volume

- That volume must be specified to be persistent

- If using a local volume, the service must also be pinned to a specific node

  (And losing that node means losing the data, unless there are other backups)

.footnote[<br/>
.red[*]If you customize Redis configuration, make sure you persist data correctly!
<br/>
It's easy to make that mistake. __Trust me!__]

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Cleaning up

.exercise[

- Remove the stateful service:
  ```bash
  docker service rm stateful
  ```

- Remove the associated volume:
  ```bash
  docker volume rm foobarstore
  ```

]

Note: we could keep the volume around if we wanted.

.debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)]

---

## Should I run stateful services in containers?

--

Depending on whom you ask, they'll tell you:

--

- certainly not, heathen!

--

- we've been running a few thousand PostgreSQL instances in containers ...
  <br/>for a few years now ... in production ... is that bad?

--

- what's a container?

--

Perhaps a better question would be:

*"Should I run stateful services?"*

--

- is it critical for my business?

- is it my value-add?
- or should I find somebody else to run them for me? .debug[[swarm/stateful.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/swarm/stateful.md)] --- class: title, self-paced Thank you! .debug[[common/thankyou.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/thankyou.md)] --- class: title, in-person That's all folks! <br/> Questions?  .debug[[common/thankyou.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/thankyou.md)] --- class: pic .interstitial[] --- name: toc-links-and-resources class: title Links and resources .nav[ [Previous section](#toc-dealing-with-stateful-services) | [Back to table of contents](#toc-chapter-4) | [Next section](#toc-) ] .debug[(automatically generated title slide)] --- # Links and resources - [Docker Community Slack](https://community.docker.com/registrations/groups/4316) - [Docker Community Forums](https://forums.docker.com/) - [Docker Hub](https://hub.docker.com) - [Docker Blog](http://blog.docker.com/) - [Docker documentation](http://docs.docker.com/) - [Docker on StackOverflow](https://stackoverflow.com/questions/tagged/docker) - [Docker on Twitter](http://twitter.com/docker) - [Play With Docker Hands-On Labs](http://training.play-with-docker.com/) .footnote[These slides (and future updates) are on → http://container.training/] .debug[[common/thankyou.md](https://github.com/jpetazzo/container.training/tree/swarm2017/slides/common/thankyou.md)]